The Power of Two Random Choices load balancing algorithm has piqued some curiosity. In this blog post, we see how it stacks up against other modern-day algorithms available in HAProxy.

Recently, I was asked twice about my opinion on supporting an algorithm known as the Power of Two Random Choices. Some believe that it’s the next big thing in load balancing since it was recently implemented in some other load balancers.

I took some time to read the 2001 report of the research by Mitzenmacher, Richa & Sitaraman and also the original 1996 study by Mitzenmacher. Attentive readers will note that the idea first emerged 23 years ago—before HAProxy even existed—and was further explored five years later while HAProxy was still in its infancy. I really liked the overall explanation, even though, I must confess, I quickly glanced over some of the mathematical demonstration. It sounded naturally good and efficient.

The principle is this: The algorithm decides which server will respond to each request by picking two random servers from the fleet and choosing the one with the fewest active connections. This check for active connections presents the nice property of fairness by making it harder for heavily loaded servers to be chosen while less loaded servers are available. Randomly choosing two servers makes it possible to add this fairness without needing to check each individual server’s load.
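To make the selection step concrete, here is a minimal sketch in Python. It is not HAProxy's code: the function name, the list of connection counts, and the choice to draw two distinct servers are assumptions made for illustration.

```python
import random

def pick_power_of_two(active_conns, rng=random):
    """Return the index of the less loaded of two distinct, randomly drawn servers."""
    first, second = rng.sample(range(len(active_conns)), 2)
    # Keep whichever candidate currently has fewer active connections.
    return first if active_conns[first] <= active_conns[second] else second

# Hypothetical fleet: active connection counts for six servers.
conns = [12, 3, 7, 9, 1, 15]
chosen = pick_power_of_two(conns)
conns[chosen] += 1  # the chosen server takes the new request
```

Only two counters are read per request, whatever the size of the fleet. Because the two draws are distinct in this sketch, the single most loaded server can never win the comparison.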

The whole purpose is to save the load balancer from the cost of having to check all servers while still making a better choice than a purely random decision. The papers discuss how the algorithm gets even better when choosing between more than two servers, although they report that the extra gains are far less impressive: going from one draw to two brings an exponential improvement, while each additional draw past two improves the result only by a constant factor.

The principle is extremely smart while also easy to understand: by randomly picking a small number of entries among a list and then selecting the least loaded one, the probability of choosing an overloaded server decreases. This is especially true as the number of servers in the fleet grows, and the distribution of selected servers widens. The system balances itself: The wider the distribution, the fairer the outcome.
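A quick way to get a feel for this effect is a small simulation. The sketch below uses the simplified model found in the literature, where requests are assigned one by one and never finish, so it says nothing about real traffic. The function name, fleet size, and seed are arbitrary choices for illustration.

```python
import random

def max_load(num_servers, num_requests, draws, seed=0):
    """Assign requests one by one; each goes to the least loaded of `draws` random servers."""
    rng = random.Random(seed)
    load = [0] * num_servers
    for _ in range(num_requests):
        # Draw candidates with replacement, then keep the least loaded one.
        candidates = [rng.randrange(num_servers) for _ in range(draws)]
        target = min(candidates, key=load.__getitem__)
        load[target] += 1
    return max(load)

# Compare the busiest server for one, two, and three draws.
for d in (1, 2, 3):
    print(d, max_load(10_000, 10_000, d))
```

In this model, the busiest server ends up noticeably less loaded with two draws than with one, while a third draw changes little.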

It sounds good on paper. But given that it’s been around for more than two decades and nobody has asked for it during this time, you have to wonder whether it still provides any benefit over other algorithms employed by modern load balancers.

A closer look

Let’s think about what makes the Power of Two algorithm stand apart and, at the same time, compare it with alternatives available in HAProxy.

Fairness, or the likelihood that traffic will be spread evenly across servers, is our first consideration. When load balancing equally loaded servers, you’d typically choose the Round Robin algorithm. While Round Robin and Power of Two differ in appearance, and you’d think that Round Robin would be fairer, statistically, they will provide nearly the same results.

For dealing with varying response times, HAProxy supports the Least Connections algorithm, which picks the least loaded server among all of them, not only among two as Power of Two does by default. On the face of it, the Power of Two algorithm seems like the better choice since it doesn’t have to compare the load of every server before choosing one. However, when you take a closer look, you see that this is an advantage only when a load balancer isn’t able to implement Least Connections correctly. When using Least Connections in HAProxy, the load balancer always knows which server has the least load because it sorts them by outstanding request count into a binary tree. Finding the least loaded one is as trivial as picking the first one in the tree.
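The idea of keeping servers sorted by load can be sketched as follows. This is an illustration only, not HAProxy's implementation: it uses a sorted Python list where HAProxy uses a tree, and the class and method names are made up for the example.

```python
import bisect
import itertools

class LeastConnections:
    """Keeps servers ordered by outstanding requests (illustrative, not HAProxy's code)."""

    def __init__(self, servers):
        self._tick = itertools.count()   # tie-breaker: oldest entry wins among equals
        self._key = {}                   # server -> key currently stored in _order
        self._order = []                 # sorted list of (load, tick, server)
        for server in servers:
            self._insert(server, 0)

    def _insert(self, server, load):
        key = (load, next(self._tick), server)
        self._key[server] = key
        bisect.insort(self._order, key)

    def _remove(self, server):
        key = self._key[server]
        del self._order[bisect.bisect_left(self._order, key)]
        return key[0]                    # the load the server had

    def acquire(self):
        """Take the first entry (least loaded) and re-insert it with one more request."""
        server = self._order[0][2]
        self._insert(server, self._remove(server) + 1)
        return server

    def release(self, server):
        """A request finished: re-insert the server with one request less."""
        self._insert(server, self._remove(server) - 1)

lb = LeastConnections(["a", "b", "c"])
print([lb.acquire() for _ in range(4)])
```

Picking a server never scans the fleet; it just reads the first entry. The cost moves to re-inserting a server whenever its count changes, which a balanced tree would do in logarithmic time.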

There is something that randomness provides when dealing with highly dynamic environments, though. Newly added servers aren’t swamped by connections, which can happen when a new server that has no load is hit by a burst of traffic until it reaches the same level of load as its peers. In contrast, a naive Least Connections algorithm would send all of the traffic to the newly added servers. To avoid this, HAProxy implements a slow-start mechanism that progressively raises a new server’s weight over a period of time. A slow-start is usually enabled on services that need a pre-heating period, such as those relying on the Least Connections or Consistent Hashing algorithms. The fact that some other load balancers do not fully support weights may be the reason why they choose Power of Two.
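As a rough illustration of a slow-start, the sketch below raises a server's effective weight over a warm-up period. The linear ramp, the floor of 1, and the function name are assumptions for this example; the exact curve HAProxy applies is not described here.

```python
def slowstart_weight(full_weight, elapsed_s, slowstart_s):
    """Effective weight of a server `elapsed_s` seconds after it came up."""
    if slowstart_s <= 0 or elapsed_s >= slowstart_s:
        return full_weight
    # Linear ramp (an assumption for this sketch); never drop below 1 so the
    # server still receives a trickle of traffic while it warms up.
    return max(1, int(full_weight * elapsed_s / slowstart_s))

# Hypothetical server with weight 100 and a 60-second warm-up period.
for t in (0, 15, 30, 45, 60):
    print(t, slowstart_weight(100, t, 60))
```

With a weight-aware algorithm, a freshly added server then receives a share of traffic proportional to this growing weight instead of the whole burst.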

With all of this in mind, the HAProxy implementation of Least Connections seems to be almost equivalent to the Power of Two algorithm. However, the studies on Power of Two address a particular, interesting point that is not sufficiently emphasized. That is, when operating multiple load balancers, which all must make an independent decision in parallel, there’s a risk that they will choose the same server and overload it. This is especially true if they base their decision on a local measurement or, in other words, pick the least loaded server without consulting one another. Some randomness might alleviate this problem.
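The overload risk can be illustrated with a toy model in which every load balancer decides at the same instant from the same stale snapshot of connection counts. The snapshot values, the number of balancers, and the function names are hypothetical, and the naive variant shown is deliberately not HAProxy's Least Connections.

```python
import random
from collections import Counter

def naive_least_conn(view, rng):
    """Pick the server that looks least loaded in the local view (rng is unused)."""
    return min(range(len(view)), key=view.__getitem__)

def power_of_two(view, rng):
    """Pick the less loaded of two distinct random servers."""
    a, b = rng.sample(range(len(view)), 2)
    return a if view[a] <= view[b] else b

def busiest_after_burst(pick, view, num_balancers, seed=0):
    """All balancers decide at once; return the hits on the most chosen server."""
    rng = random.Random(seed)
    hits = Counter(pick(view, rng) for _ in range(num_balancers))
    return max(hits.values())

snapshot = [3, 1, 4, 2, 5, 6]   # hypothetical counts seen by every balancer
print(busiest_after_burst(naive_least_conn, snapshot, 12))  # all 12 pick server 1
print(busiest_after_burst(power_of_two, snapshot, 12))
```

With identical views, the naive variant sends the whole burst to one server, while the random draws spread it over several.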

The Random algorithm was already added to HAProxy to address this specific concern. Running multiple load balancers is common in service mesh architectures where HAProxy is deployed as a sidecar proxy. In this scenario, there are as many load balancers as there are service nodes.

Given that we had already implemented Random a few months ago, adding support for an argument to configure the number of draws or servers to choose from and pick the least loaded one was absolutely trivial to do. It only required 17 lines of code. So, the motivation to perform a benchmark and get true data, rather than anecdotal evidence, started to build up.

Benchmark setup

For our benchmark tests, we needed an application and landed on a simple one made of four REST/JSON services. You can download it from our git repository. This new application, which is called MyTime, says Hello to a user and tells them what time it is on their side of the world based upon the time zone stored in the local database. For example:

    GET /MyTime/bob HTTP/1.1
    Host: mytime.example.local

    HTTP/1.0 200 OK
    Server: BaseHTTP/0.6 Python/3.6.7
    Date: Tue, 22 Jan 2019 16:38:11 GMT

    <HTML><BODY>Hello Bob Smith, your IP address is 198.18.0.7 and your local time is 10:38.</BODY></HTML>

The application relies on a Time service that returns the current time of day, a User service that mimics a database of known users and their attributes (e.g. full name and time zone), and a Log service that logs the event represented by the user’s request. The Log service also queries Time and User to retrieve some extra information to be logged. All of these services are accessed through a REST/JSON API.

The MyTime app flowchart

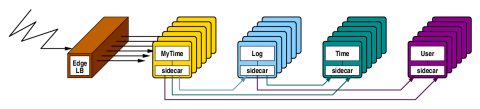

This application was deployed on six low-power, quad-core ARM servers from our lab with HAProxy as a sidecar next to each service. Since HTTP traffic has to reach the MyTime application, a seventh node was prepared with HAProxy to serve as the edge load balancer in front of the cluster.

HAProxy serving as the edge load balancer

This results in six load balancers being present in front of the Log service (the MyTime sidecars) and 12 load balancers in front of the Time and User services (MyTime and Log sidecars).

Several algorithm settings were compared under sustained load to see which would fare better:

Round Robin

Least Connections

Random (with a draw of 1)

Power of Two

In addition, given that we have an edge load balancer available, it sounded really appealing to compare this setup to the optimal case where a single load balancer is present in front of each service. So, we added an extra test with an external load balancer, which is one instance per service, running on the edge machine. In this case, nothing changes for the services themselves. Their sidecars simply know a single endpoint per service, which is the target service’s load balancer.

HAProxy serving as the external load balancer

The tests were run under three different load levels:

No contention: each service runs on a dedicated CPU core to see how the algorithms compare for services running on dedicated servers without external stress.

Moderate contention: all four services share two CPU cores to match VMs and containers running on moderately loaded servers, like in most clouds.

High contention: all four services share the same CPU core to match the case of overloaded VMs or containers with noisy neighbors.

For each test, we measure:

Application performance: the number of requests per second during the whole test.

User experience: the application’s response time during the whole test.

Load-balancing fairness: the maximum load inflicted upon each service during the test. Or, in other words, how evenly servers were chosen by a load-balancing algorithm.
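For readers who want to reproduce these three measurements, the sketch below derives them from a list of completed requests. It is only one possible way to compute them: the record layout `(server, start_s, end_s)` and the function name are assumptions, not the tooling used for this benchmark.

```python
from collections import defaultdict

def summarize(requests):
    """requests: list of (server, start_s, end_s) tuples for completed requests."""
    first = min(start for _, start, _ in requests)
    last = max(end for _, _, end in requests)
    rate = len(requests) / (last - first)            # requests per second
    avg_rt = sum(end - start for _, start, end in requests) / len(requests)

    # Peak concurrency per server: sweep over +1/-1 events in time order.
    events = defaultdict(list)
    for server, start, end in requests:
        events[server].append((start, 1))
        events[server].append((end, -1))
    peak = {}
    for server, evs in events.items():
        current = best = 0
        for _, delta in sorted(evs):   # at equal times, -1 sorts before +1
            current += delta
            best = max(best, current)
        peak[server] = best
    return rate, avg_rt, peak

# Hypothetical sample: four one-second requests spread over two servers.
sample = [("s1", 0.0, 1.0), ("s1", 0.5, 1.5), ("s2", 1.0, 2.0), ("s1", 1.0, 2.0)]
print(summarize(sample))
```

The peak value per server is the fairness indicator: the closer the peaks are to each other, the more evenly the algorithm spread the load.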

Results

No contention

Testing application performance with no contention

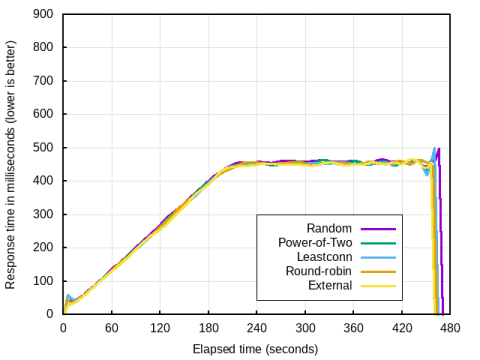

Testing user experience with no contention

Testing load balancing fairness with no contention

Under no contention, all algorithms are roughly equal. Random does the least well. Power of Two is not as good as Least Connections or Round Robin, but it is very close. This makes sense because, if there is no contention, the highest fairness should provide the smoothest distribution of load across servers and, hence, the lowest queuing and the lowest response times. But based on this, all algorithms, except for Random, could be considered equal.

Moderate contention

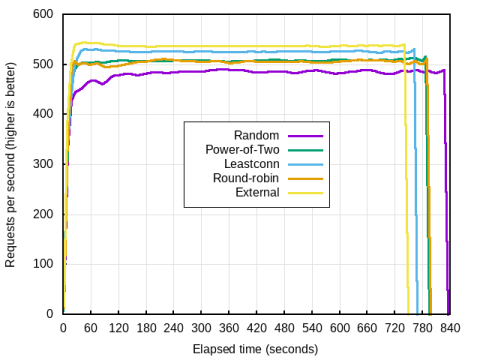

Testing application performance with moderate contention

Testing user experience with moderate contention

Testing load balancing fairness with moderate contention

Under moderate contention, Random becomes very bad in terms of load distribution, which matches what was predicted in the studies. Its request rate is around 10% lower than the best alternatives. Round Robin is not good either. Very likely, it causes some excess queuing on already slow servers and leaves them with few options to recover from transient overloads.

Power of Two shows a much better distribution than these last two, with peak connection counts about 30% lower. In that regard, it’s already better than Round Robin in this case. However, its performance in requests per second and response time is identical to Round Robin’s.

Least Connections performs very well here, with peak loads about 4% lower than Power of Two. It also shows request rates about 4% higher and response times that are about 4% lower. This probably matches the paper’s prediction regarding the possibility that drawing more than two nodes will improve the overall distribution and performance. The Least Connections algorithm compares the load of all servers, not just two.

Finally, the external load balancer is, as expected, the best solution, given that it always picks the best server based on its centralized knowledge of each server’s load. Its performance is about 3% better than the distributed Least Connections (i.e. sidecars connecting directly to other service nodes) on all metrics. So, it is about 7% better than Power of Two. It’s worth noting that this extra 3% difference is not huge, but it indicates that one server could be turned off in a farm of 30 servers by simply pointing all sidecars to each service’s edge load balancer. That is, assuming that the edge load balancer is sufficiently sized.
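The arithmetic behind these figures is easy to check. The snippet below simply combines the rounded percentages quoted above; the variable names are arbitrary.

```python
least_conn_gain = 0.04   # Least Connections over Power of Two (from the results)
central_gain = 0.03      # central balancer over distributed Least Connections

# Gains compound: 1.04 * 1.03 is slightly more than 1.07.
combined = (1 + least_conn_gain) * (1 + central_gain) - 1
print(f"combined gain over Power of Two: {combined:.1%}")          # about 7%

farm_size = 30
print(f"capacity freed: {farm_size * central_gain:.1f} servers")   # about one server
```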

It was also interesting to see that the complete test finished 26 seconds faster on Least Connections than on Power of Two or Round Robin. The external load balancer test finished 38 seconds faster than Power of Two or Round Robin. While this most often doesn’t matter for web-facing applications, it definitely affects microservice architectures that are composed of long service chains. In that scenario, the total processing time directly depends on the processing time of individual services.

High contention

Testing application performance with high contention

Testing user experience with high contention

Testing load balancing fairness with high contention

Under high contention, the load distribution provided by the Random algorithm is disastrous. Round Robin is also quite poor.

Power of Two manages to reduce the peak load on the servers beyond what others manage to do. Interestingly, it is actually an imbalance that causes this reduction due to the competition between the Time and the User services. Time was granted less CPU time, and User was granted more, but they still achieved the same results. It’s possible that we’re observing some artifacts of the scheduler’s tick (4 ms at 250 Hz), which fixes the period of time during which a task can run uninterrupted.

Regarding the measured performance, Power of Two remains at the exact same request rate and response time as Round Robin. Least Connections consistently remains about 4% better on both metrics. The centralized load balancer is a further 3% better.

Here, the complete test finished 24 seconds earlier on Least Connections than on Power of Two or Round Robin. The external load balancer test finished 36 seconds earlier than Power of Two or Round Robin.

Analysis

As expected, relying on an external, central load balancer gives the best results in environments with moderate or high contention for system resources. It is closely followed by the Least Connections algorithm, then by Power of Two. Round Robin, which is not very good in this case, and the Random algorithm, which creates significant peaks and has a distribution that likely follows a long tail, round out the set.

Power of Two consistently gives the same performance regarding requests per second and average response time as Round Robin. However, it has a much better distribution under load. It makes sense to always use it as an alternative to Round Robin when a good Least Connections implementation is unavailable. Least Connections consistently performs better.

How is it possible that Least Connections works better than Power of Two in a distributed environment? Very likely, the explanation stems from the Round Robin effect that happens between two similarly loaded servers in Least Connections. Indeed, if one load balancer sees servers A and B as equal and another load balancer sees servers A and C as equal, a naive Least Connections will pick A for both of them. That would result in server A taking twice the expected load. With HAProxy’s Least Connections, the pick among equally loaded servers depends on the order in which they were released. For two pairs of servers seen by two load balancers, there are four possible outcomes:

| LB 1 selects | LB 2 selects | Outcome |
|---|---|---|
| A | A | Bad |
| A | C | Good |
| B | A | Good |
| B | C | Good |

As you can see, the case in which both load balancers pick the same server and overload it happens only a quarter of the time. With three load balancers, it would be even less likely, and with more servers, less likely still. So this form of randomness is already present in the Least Connections algorithm’s construction. HAProxy always tries to maximize the interval between two picks of the same server.
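The quarter figure can be verified by enumerating the outcomes. The sketch below assumes that each load balancer picks independently and uniformly among the servers it sees as equally loaded, which is a simplification of the release-order behavior described above. It counts only the case where every balancer lands on the same server, and the function name is made up.

```python
from fractions import Fraction
from itertools import product

def overlap_probability(candidates_per_lb):
    """Chance that every balancer picks the same server, with uniform independent picks."""
    outcomes = list(product(*candidates_per_lb))
    same = sum(1 for picks in outcomes if len(set(picks)) == 1)
    return Fraction(same, len(outcomes))

# Two balancers sharing server A, as in the table.
print(overlap_probability([("A", "B"), ("A", "C")]))               # 1/4
# A third balancer that also sees A.
print(overlap_probability([("A", "B"), ("A", "C"), ("A", "D")]))   # 1/8
# Two balancers, each hesitating between three servers.
print(overlap_probability([("A", "B", "C"), ("A", "D", "E")]))     # 1/9
```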

Conclusion

At the very least, Power of Two is always better than Random. So, we decided to change the default number of draws for the Random algorithm from one to two, matching Power of Two. This will improve the quality of the Random algorithm’s distribution. It, of course, also supports weights. This will be available in the 2.0 release, wherein you can set the number of draws as a parameter when using the Random algorithm.

The HAProxy implementation of Least Connections is already significantly better than Power of Two and matches the highest degree of accuracy that the theoretical, best scenario version of the algorithm can produce: comparing all servers within the fleet. It avoids inflicting bursts of traffic onto a single server and, so, is perfectly suitable for both single and distributed load balancer deployments.

As explained in the papers cited, Power of Two was designed as a poor man’s Least Connections to be used in situations where a true Least Connections would be impractical, difficult to implement, or come with a significant performance hit. While it’s an excellent workaround, especially as an alternative to Round Robin, whenever you have the choice, you should still prefer Least Connections since it demonstrates better server response times and reduces the cost of server load and processing power.

Furthermore, whenever possible (i.e. if it doesn’t induce any extra cost), you should prefer to configure the sidecars to always pass through a central load balancer as it improves performance by about 3% over the distributed Least Connections. It is also 7% better than Power of Two running on sidecars.
