Synopsys

At HAProxy Technologies, we do provide professional services around HAPRoxy: this includes HAProxy itself, of course, but as well as the underlying OS tuning, advice and recommendation about the architecture and sometimes we can also help customers troubleshoot application layer issues.

We don’t fix issues for the customer, but using the information provided by HAProxy, we are able to reduce the investigation area, saving customers time and money.

The story I’m relating today is an issue of one of these PS.

One of our customers is a hosting company that hosts some very busy PHP / MySQL websites. They used successfully HAProxy in front of their application servers.

They used to have a single MySQL server which was some kind of SPOF and which had to handle several thousand requests per second.

Sometimes, they had issues with this DB: it was like the clients (hence the Web servers) can’t hang when using the DB.

So they decided to use MySQL replication and build an active/passive cluster. They also decided to split reads (SELECT queries) and writes (DELETE, INSERT, UPDATE queries) at the application level.

Then they were able to move the MySQL servers behind HAProxy.

Enough for the introduction 🙂 Today’s article will discuss HAProxy and MySQL at high request rates, and an error some of you may already have encountered: TCP source port exhaustion (the famous high number of sockets in TIME_WAIT).

Diagram

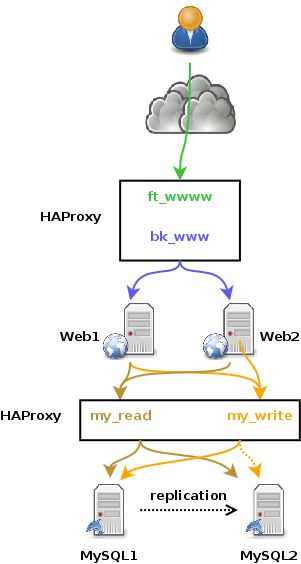

So basically, we have here a standard web platform that involves HAProxy to load-balance MySQL:

| ||

The MySQL Master server is used to send WRITE requests and the READ request is “weighted-ly” load-balanced (the slaves have a higher weight than the master) against all the MySQL servers.

MySql Scalability

One way of scaling MySQL, is to use the replication method: one MySQL server is designed as master and must manage all the write operations (DELETE, INSERT, UPDATE, etc…). for each operation, it notifies all the MySQL slave servers. We can use slaves for reading only, offloading these types of requests from the master.

The replication method allows scalability of the reading part, so if your application requires much more writes, then this is not the method for you.

Of course, one MySQL slave server can be designed as a master when the master fails! This also ensures MySQL high-availability.

So, Where Is the Problem ???

This type of platform works very well for the majority of websites. The problem occurs when you start having a high rate of requests. By high, I mean several thousand per second.

TCP source port exhaustion

HAProxy works as a reverse-proxy and so uses its own IP address to get connected to the server.

Any system has around 64K TCP source ports available to get connected to a remote IP:port. Once a combination of “source IP:port => dst IP:port” is in use, it can’t be re-used.

First lesson: you can’t have more than 64K opened connections from a HAProxy box to a single remote IP:port couple. I think only people load-balancing MS Exchange RPC services or SharePoint with NTLM may one day reach this limit…

(well, it is possible to workaround this limit using some hacks we’ll explain later in this article)

Why does TCP port exhaustion occur with MySQL clients???

As I said, the MySQL request rate was a few thousands per second, so we never ever reach this limit of 64K simultaneous opened connections to the remote service…

What’s up then???

Well, there is an issue with MySQL client library: when a client sends its “QUIT” sequence, it performs a few internal operations before immediately shutting down the TCP connection, without waiting for the server to do it. A basic tcpdump will show it to you easily.

Note that you won’t be able to reproduce this issue on a loopback interface, because the server answers fast enough… You must use a LAN connection and 2 different servers.

Basically, here is the sequence currently performed by a MySQL client:

Mysql Client ==> "QUIT" sequence ==> Mysql Server

Mysql Client ==> FIN ==> MySQL Server

Mysql Client <== FIN ACK <== MySQL Server

Mysql Client ==> ACK ==> MySQL ServerThis leads the client connection to remain unavailable for twice the MSL (Maximum Segment Life) time, which means 2 minutes.

This type of close has no negative impact when the connection is made over a UNIX socket.

Explication of the issue (much better than I could explain it myself):

“There is no way for the person who sent the first FIN to get an ACK back for that last ACK. You might want to reread that now. The person that initially closed the connection enters the TIME_WAIT state; in case the other person didn’t really get the ACK and thinks the connection is still open. Typically, this lasts one to two minutes.” (Source)

Since the source port is unavailable for the system for 2 minutes, this means that over 534 MySQL requests per second you’re in danger of TCP source port exhaustion: 64000 (available ports) / 120 (number of seconds in 2 minutes) = 533.333.

This TCP port exhaustion appears on the MySQL client server itself, but as well on the HAProxy box because it forwards the client traffic to the server… And since we have many web servers, it happens much faster on the HAProxy box !!!!

Remember: at spike traffic, my customer had a few thousands requests/s….

How to avoid TCP source port exhaustion?

Here comes THE question!!!!

First, a “clean” sequence should be:

Mysql Client ==> "QUIT" sequence ==> Mysql Server

Mysql Client <== FIN <== MySQL Server

Mysql Client ==> FIN ACK ==> MySQL Server

Mysql Client <== ACK <== MySQL ServerActually, this sequence happens when both MySQL client and server are hosted on the same box and uses the loopback interface, that’s why I said sooner that if you want to reproduce the issue you must add “latency” between the client and the server and so use 2 boxes over the LAN.

So, until MySQL rewrite the code to follow the sequence above, there won’t be any improvement here!!!!

Increasing source port range

By default, on a Linux box, you have around 28K source ports available (for a single destination IP:port):

$ sysctl net.ipv4.ip_local_port_range

net.ipv4.ip_local_port_range = 32768 61000In order to get 64K source ports, just run:

[sourcecode]$ sudo sysctl net.ipv4.ip_local_port_range=”1025 65000″[/sourcecode]

And don’t forget to update your /etc/sysctl.conf file!!!

This should be applied on the web servers as well.

Allow usage of source port in TIME_WAIT

A few sysctls can be used to tell the kernel to reuse faster the connection in TIME_WAIT:

net.ipv4.tcp_tw_reuse

net.ipv4.tcp_tw_recycletw_reuse can be used safely, be but careful with tw_recycle… It could have side effects. Same people behind a NAT might be able to get connected on the same device. So only use if your HAProxy is fully dedicated to your MySql setup.

anyway, these sysctls were already properly setup (value = 1) on both HAProxy and web servers.

This should be applied on the web servers as well.

tw_reuse should also be applied on the web server.

Using multiple IPs to get connected to a single server

In HAProxy configuration, you can precise on the server line the source IP address to use to get connected to a server, so just add more server lines with different IPs.

In the example below, the IPs 10.0.0.100 and 10.0.0.101 are configured on the HAProxy box:

[...]

server mysql1 10.0.0.1:3306 check source 10.0.0.100

server mysql1_bis 10.0.0.1:3306 check source 10.0.0.101

[...]This allows us to open up to 128K source TCP port…

The kernel is responsible to affect a new TCP port when HAProxy requests it. Dispite improving things a bit, we still reach some source port exhaustion… We could not get over 80K connections in TIME_WAIT with 4 source IPs…

Let HAProxy manage TCP source ports

You can let HAProxy decides which source port to use when opening a new TCP connection, on behalf of the kernel. To address this topic, HAProxy has built-in functions which make it more efficient than a regular kernel.

Let’s update the configuration above:

[...]

server mysql1 10.0.0.1:3306 check source 10.0.0.100:1025-65000

server mysql1_bis 10.0.0.1:3306 check source 10.0.0.101:1025-65000

[...]We managed to get 170K+ connections in TIME_WAIT with 4 source IPs… and not source port exhaustion anymore !!!!

Use a memcache

Fortunately, the devs from this customer are skilled and write flexible code 🙂

So they managed to move some requests from the MySQL DB to a memcache, opening much less connections.

Use MySQL persistent connections

This could prevent fine Load-Balancing on the Read-Only farm, but it would be very efficient on the MySQL master server.

Conclusion

If you see some SOCKERR information messages in HAProxy logs (mainly on health check), you may be running out of TCP source ports.

Have skilled developers who write flexible code, where moving from a DB to another is made easy

This kind of issue can happen only with protocols or applications which make the client close the connection first

This issue can’t happen on HAProxy in HTTP mode, since it let the server closes the connection before sending a TCP RST