Recently, we added powerful new K8s features to HAProxy Fusion Control Plane, enabling service discovery in any Kubernetes or Consul environment without complex technical workarounds.

We've covered the headlining features in our HAProxy Fusion Control Plane 1.2 LTS release blog. But while service discovery, external load balancing, and multi-cluster routing are undeniably beneficial, context helps us understand their impact.

Here are some key takeaways from our latest Kubernetes developments and why they matter.

HAProxy already has an Ingress Controller. Why start building K8s routing around HAProxy Fusion Control Plane?

While we've long offered HAProxy Enterprise Kubernetes Ingress Controller to help organizations manage load balancing and routing in Kubernetes, some use cases have required technical workarounds. Some users have also wanted a K8s solution that works like the load balancers they already know. As a result, we've redefined Kubernetes load balancing with HAProxy Fusion for the following reasons:

The Ingress API wasn't designed to functionally emulate a typical load balancer and doesn't support external load balancing.

Ingress-based solutions can have a steeper learning curve than load balancers, and users don't want to manage individualized routing for hundreds of services.

Because of Ingress limitations, organizations that favor flexibility and choose to run K8s in public clouds are often forced to use restrictive, automatically provisioned load balancers. Those who also need to deploy on-premises face major hurdles.

External load balancing in public clouds is relatively simple, largely thanks to built-in cloud service integrations, but it becomes far more complicated in an on-premises environment, where those integrations simply didn't exist until now.

Few (if any) solutions were available to tackle external load balancing for bare-metal K8s. And if you wanted to bring your own external load balancer to the public cloud, needing both an ingress controller and an application load balancer (ALB) quickly inflated operational costs.

All users can now manage traffic however they want with HAProxy Fusion 1.2. Our latest release brings K8s service discovery, external load balancing, and multi-cluster routing to HAProxy Enterprise. You no longer need an ingress controller to optimize K8s application delivery, and in some cases, load balancing managed by HAProxy Fusion is easier.

Easing the pain of common K8s deployment challenges

Routing external traffic into your on-premises Kubernetes cluster can be tricky. It's hard to expose pods and services spread across your entire infrastructure, because you don’t have the automated integration with external load balancers that public clouds brought to the Kubernetes world.

Here's what Spectro Cloud's 2023 State of Production Kubernetes report found:

98% of the report's 333 IT and AppDev stakeholders face one or more of K8s' most prominent deployment and management challenges.

Most enterprises operate 10+ Kubernetes clusters in multiple hosting environments, and 14% of those enterprises manage over 100 clusters!

83% of interviewees used two or more (often many more) Kubernetes distributions across different services and vendors (including AWS EKS-D, Red Hat OpenShift, and others).

The takeaway? Kubernetes remains universally challenging to deploy and manage effectively. Meanwhile, the growing scale and complexity of K8s distributions is magnifying the issues organizations are already grappling with.

Plus, Kubernetes adoption is through the roof as containerization gathers steam. The value of a multi-cluster, deployment-agnostic K8s load balancing solution is immense, and so is the urgency.

HAProxy helps solve common challenges around bring-your-own external load balancing practices, network bridging, pod management, and testing.

It all starts with service discovery

Without exposing your pod services (or understanding your deployment topology), it's tough to set up traffic routing and load balancing. Services also can't become dynamically aware of one another without hard-coded addresses or tedious endpoint configuration. A dynamic approach is crucial because K8s pods and their IP addresses are ephemeral. Service discovery solves these issues, but not all approaches are equal.

Kubernetes setups without a load balancer commonly enable service discovery through a service registry. This happens on the client side and can complicate the logic each client needs for pod and container awareness. HAProxy service discovery is server-side: the load balancer does the work of connecting to services and retrieving information on active pods.
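To make the server-side model concrete, here is a minimal Python sketch of the reconciliation step a load balancer might run each time it refreshes its view of the cluster. It assumes the endpoint list has already been fetched (for example, from the Kubernetes API); the `Endpoint` type and `reconcile` function are hypothetical illustrations, not HAProxy Fusion's implementation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    """Hypothetical pod endpoint, as a cluster API query might report it."""
    ip: str
    port: int
    ready: bool


def reconcile(current: set[str], endpoints: list[Endpoint]) -> tuple[set[str], set[str]]:
    """Diff the load balancer's server list against the discovered endpoints.

    Returns (to_add, to_remove) as sets of "ip:port" strings, so only the
    changes need to be applied instead of rebuilding the whole backend.
    """
    # Only pods that report ready should receive traffic.
    desired = {f"{ep.ip}:{ep.port}" for ep in endpoints if ep.ready}
    return desired - current, current - desired


if __name__ == "__main__":
    current = {"10.0.0.5:8080", "10.0.0.6:8080"}
    discovered = [
        Endpoint("10.0.0.6", 8080, ready=True),   # still running
        Endpoint("10.0.0.7", 8080, ready=True),   # newly scheduled pod
        Endpoint("10.0.0.8", 8080, ready=False),  # not ready yet, skipped
    ]
    to_add, to_remove = reconcile(current, discovered)
    print(sorted(to_add), sorted(to_remove))
```

Because clients only ever talk to the load balancer, none of this logic has to live in application code, which is the practical difference from client-side discovery through a registry.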

Understanding the HAProxy advantage

Service discovery now has a dedicated tab in the HAProxy Fusion Control Plane UI, and the Kubernetes API powers it behind the scenes. HAProxy Fusion links to the K8s API, which lets HAProxy Enterprise dynamically update service configurations and automatically push those updates to your cluster(s). Using HAProxy Enterprise instances to route traffic, together with HAProxy Fusion Control Plane, has some unique advantages:

Layer 4 (TCP) and Layer 7 (HTTP) load balancing without separately managing Ingress or Gateway API resources

Centralized management and observability

An easier configuration language, free of complicated annotations

Multi-cluster routing

HAProxy Enterprise can now perform external load balancing for on-premises Kubernetes applications, configured via HAProxy Fusion Control Plane. HAProxy Fusion is aware of your Kubernetes infrastructure, and HAProxy Enterprise can sit inside or outside of your Kubernetes cluster.

More traffic, fewer problems

HAProxy Enterprise also treats TCP traffic as a first-class citizen and includes powerful multi-layered security features:

Web application firewall

…and more

External load balancing and multi-cluster routing use standard HAProxy Enterprise instances for traffic management. We can now automatically update the load balancer configurations of those instances as the backend pods running behind them change.

Beyond external load balancing, HAProxy Fusion Control Plane and HAProxy Enterprise also work well with cloud-based Kubernetes clusters. Where you'd otherwise pay for multiple load balancing services (as on AWS, for example), this pairing can help cut costs. Greater overall simplicity, speed, and consolidation are critical wins for users operating in a complex application environment.

Leverage external load balancing and multi-cluster routing in any environment

Automated scaling, unique IP and hostname assignments, and service reporting are core features of HAProxy's external load balancing. So, how do the pieces fit together?

Clients using a Kubernetes-backed application send web requests to it over the internet.

These requests flow into HAProxy Enterprise, which routes app traffic to the appropriate pod(s) in a K8s cluster. Meanwhile, the cluster continually shares service and service type information directly with HAProxy Fusion Control Plane (service discovery).

HAProxy Fusion is also bound to your HAProxy Enterprise instances, enabling observability, management, and improved scalability.

HAProxy Enterprise uses IP Address Management (IPAM) to manage blocks of available IP addresses. We can automatically detect load balancer service objects and create a public IP bind from that information. Administrators can create their own IPAM definitions within their K8s configuration and then create a load balancer service. The load balancer's status, IP, binds, and servers are then visible both in K8s and in your HAProxy Enterprise configuration. This closely mirrors the external load balancing experience in a public cloud environment.
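The core IPAM idea, handing out the next free address from an administrator-defined block to each load balancer service, can be sketched with Python's standard `ipaddress` module. The `IpPool` class and the service names below are hypothetical; they illustrate the allocation logic only and are not HAProxy's IPAM definition format.

```python
import ipaddress


class IpPool:
    """Hypothetical IPAM pool that assigns addresses from one CIDR block."""

    def __init__(self, cidr: str) -> None:
        self.network = ipaddress.ip_network(cidr)
        self.assigned = {}  # service name -> assigned IP address

    def allocate(self, service: str):
        # Re-use an existing assignment so a service keeps a stable IP.
        if service in self.assigned:
            return self.assigned[service]
        in_use = set(self.assigned.values())
        # hosts() skips the network and broadcast addresses.
        for ip in self.network.hosts():
            if ip not in in_use:
                self.assigned[service] = ip
                return ip
        raise RuntimeError(f"pool {self.network} is exhausted")

    def release(self, service: str) -> None:
        # Return the address to the pool when the service is deleted.
        self.assigned.pop(service, None)


if __name__ == "__main__":
    pool = IpPool("192.0.2.0/29")
    print(pool.allocate("web"))  # 192.0.2.1
    print(pool.allocate("api"))  # 192.0.2.2
    pool.release("web")
    print(pool.allocate("db"))   # 192.0.2.1 (the freed address is reused)
```

Keeping assignments keyed by service name is what lets a service's bind survive repeated reconciliation passes without its public IP changing.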

Running a single K8s cluster has always been limiting for organizations that value high availability, A/B testing, blue/green deployments, or multi-region flexibility. The statistics above also show just how pervasive multi-cluster Kubernetes setups are.

HAProxy's multi-cluster routing is built around one central requirement: load balancing between multiple K8s clusters that run active/active, active/passive, or across multiple regions. Here's how HAProxy Fusion and HAProxy Enterprise support a few important use cases.

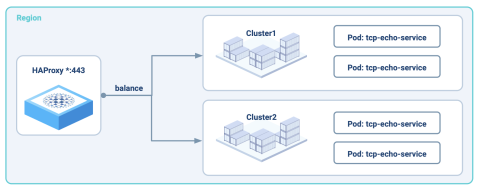

Multi-cluster routing example #1: multiple simultaneous clusters

Organizations often want to balance network traffic across multiple clusters. For example, each cluster might run the same version of an application in a different availability zone. HAProxy Enterprise and HAProxy Fusion let you run your load balancer instances in either active/active or active/passive mode, depending on your needs.

This setup is pretty straightforward:

Your HAProxy Enterprise instance and Kubernetes clusters are contained within one region.

HAProxy Enterprise routes traffic between two or more clusters and their pods using standard load-balancing mechanisms.
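The difference between the two modes comes down to how a target is chosen. The hypothetical Python sketch below applies each mode to a list of clusters with a simple health flag; the `Cluster` type and the round-robin choice are illustrative assumptions, since in HAProxy Enterprise this behavior is configured rather than coded.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Cluster:
    """Hypothetical cluster record with a health flag from health checks."""
    name: str
    healthy: bool = True


def active_passive(clusters):
    """Send all traffic to the first healthy cluster in priority order."""
    for cluster in clusters:
        if cluster.healthy:
            return cluster
    raise RuntimeError("no healthy cluster")


class ActiveActive:
    """Rotate requests across every healthy cluster (round-robin)."""

    def __init__(self, clusters):
        self.clusters = clusters
        self._counter = itertools.count()

    def pick(self):
        healthy = [c for c in self.clusters if c.healthy]
        if not healthy:
            raise RuntimeError("no healthy cluster")
        return healthy[next(self._counter) % len(healthy)]


if __name__ == "__main__":
    a, b = Cluster("cluster-1"), Cluster("cluster-2")
    rr = ActiveActive([a, b])
    print([rr.pick().name for _ in range(4)])  # alternates between both clusters
    print(active_passive([a, b]).name)         # cluster-1 takes all traffic
    a.healthy = False
    print(active_passive([a, b]).name)         # standby cluster-2 takes over
```

Active/active uses all capacity at once, while active/passive keeps a standby idle in exchange for a simpler failure story.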

Multi-cluster routing example #2: A/B testing and blue/green deployments

Organizations often use A/B testing to compare two versions of something and see which performs better. For applications, this means sending one portion of users to Cluster 1 (for Test A) and another to Cluster 2 (for Test B), where different app versions are waiting for them.

Blue/green deployments work similarly, except that traffic transitions gradually from one application version to another, and only once the second cluster is ready to accept it. As a result, you can avoid downtime and switch between application versions as needed.

Two HAProxy Enterprise instances, running either in active/active or active/passive mode, act as endpoints that route traffic between clusters.

Some clients are sent to the application version contained within Cluster 1, while HAProxy sends the other group to Cluster 2.

Over time, you can tune these traffic flows and direct them to the appropriate pods according to server load, user feedback, or evolving deployment goals.
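Both patterns boil down to a weighted split between two clusters. The Python sketch below shows one common way to do that: hashing a client identifier into a stable bucket so each user sticks to one version while the percentage is tuned. The `choose_cluster` function, the cluster names, and the SHA-256 bucketing are illustrative assumptions, not HAProxy's algorithm.

```python
import hashlib


def choose_cluster(client_id: str, weight_b: int) -> str:
    """Assign a client to cluster-1 (version A) or cluster-2 (version B).

    weight_b is the percentage (0-100) of clients sent to cluster-2.
    Hashing the client ID keeps each user on the same version across requests.
    """
    if not 0 <= weight_b <= 100:
        raise ValueError("weight_b must be between 0 and 100")
    digest = hashlib.sha256(client_id.encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket 0-99
    return "cluster-2" if bucket < weight_b else "cluster-1"


if __name__ == "__main__":
    clients = [f"user-{i}" for i in range(1000)]
    # Blue/green style rollout: raise the weight in steps as cluster-2 proves healthy.
    for weight in (0, 10, 50, 100):
        share = sum(choose_cluster(c, weight) == "cluster-2" for c in clients)
        print(f"weight {weight:>3}% -> {share} of {len(clients)} clients on cluster-2")
```

Because a client's bucket never changes, raising the weight only moves additional clients to cluster-2; nobody already on the new version is bounced back to the old one.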

Multi-cluster routing example #3: multi-region failover

Having a global Kubernetes infrastructure is highly desirable, but stretching a single cluster across multiple regions isn't readily possible. Networking, storage, and other factors complicate such deployments, which calls for a multi-cluster approach instead. Having more clusters and pods at your disposal maximizes uptime, which is exactly what this setup is geared towards.

Each region can run one or more Kubernetes clusters with HAProxy instances in front of them. Should one of the Kubernetes clusters fail, HAProxy can automatically send traffic to the other cluster without disrupting users. The one tradeoff is slightly higher latency until you recover the failed services.

We have an HAProxy Enterprise instance running in region one (e.g., us-east-1) and another running in region two (e.g., us-west-2).

Each HAProxy instance routes traffic to an assigned regional cluster.

If any backend pods fail, we can reroute traffic to another cluster in the other region without impacting availability.
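The failover decision each HAProxy instance makes can be sketched in a few lines of Python. This is a simplified illustration that assumes a per-cluster count of healthy pods is available (for example, from health checks); the `RegionalCluster` type and `route` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RegionalCluster:
    """Hypothetical view of one region's cluster as seen by its HAProxy instance."""
    region: str
    healthy_pods: int


def route(local: RegionalCluster, remote: RegionalCluster) -> str:
    """Prefer the local region; fail over to the remote region when local pods are down."""
    if local.healthy_pods > 0:
        return local.region
    if remote.healthy_pods > 0:
        # Cross-region hop: requests still succeed, with somewhat higher latency.
        return remote.region
    raise RuntimeError("no healthy pods in either region")


if __name__ == "__main__":
    east = RegionalCluster("us-east-1", healthy_pods=3)
    west = RegionalCluster("us-west-2", healthy_pods=2)
    print(route(east, west))  # us-east-1
    east.healthy_pods = 0     # every pod in the local cluster has failed
    print(route(east, west))  # us-west-2
```

The extra latency mentioned above comes from that cross-region hop, which lasts only until the local cluster reports healthy pods again.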

Learn more about the power of external load balancing and multi-cluster routing

Thanks to automated service discovery in HAProxy Fusion and seamless integration with HAProxy Enterprise, we can now address many common pain points associated with Kubernetes deployments. External load balancing should be as easy on-premises as it is in public clouds, and load balancing between clusters is critical for uptime, scalability, and testing. Our latest updates deliver these capabilities to our customers.

External load balancing and multi-cluster routing are standard in HAProxy Enterprise. HAProxy Fusion ships with HAProxy Enterprise at no added cost, unlocking these powerful new functions.

And we have so much more to talk about! Check out our webinar to dive even deeper.
