HAProxy gives you an arsenal of sophisticated countermeasures including deny, tarpit, silent drop, reject, and shadowban to stop malicious users.

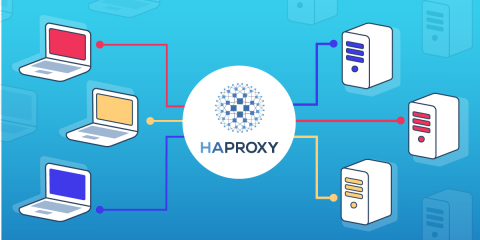

There are two phases to stopping malicious users from abusing your website and online applications: step one is detection, and step two is deploying countermeasures. HAProxy is more powerful than nearly every other load balancer when it comes to both. That’s due to its built-in ability to track clients across requests, flag abusive clients, and then take action to stop them.

For detection, you can use HAProxy stick tables to expose anomalous behavior. Stick tables are key-value storage built into HAProxy where you increment counters that track how often a client has done a certain action. For example, you can see how often a client has accessed a particular webpage, monitor how many errors they’ve triggered, or count their total number of concurrent connections. When tracked over time, these signals uncover malicious activity, which you can set rules for and prevent.
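As a rough sketch of what such tracking can look like, the fragment below keeps three counters per client IP in a single stick table: request rate, HTTP error rate, and concurrent connections. The table size, expiry, and one-minute windows are illustrative assumptions rather than recommendations.

```haproxy
frontend www
    bind :80
    default_backend webservers

    # One entry per client IP; each entry carries three counters
    # (size, expiry, and periods are assumed values)
    stick-table type ip size 100k expire 10m store http_req_rate(1m),http_err_rate(1m),conn_cur

    # Track at connection time so that conn_cur reflects open connections
    tcp-request connection track-sc0 src
```

With a table like this in place, the Runtime API command `show table www` lists the tracked entries and their current counter values.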

For the countermeasures phase, HAProxy lets you set rules called Access Control Lists (ACLs), which categorize clients as malicious or not. For instance, if a client has triggered a lot of 404 Page Not Found errors, it’s a sign that they may be scanning your website for vulnerabilities. You would create an ACL that flags these users. Read our blog post Introduction to HAProxy ACLs to learn the basics of setting up ACLs in HAProxy. Once you’ve flagged a client, you can apply a response policy that stops them from doing what they’re doing. Response policies define which action you’ll take.
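The 404 scenario above could be sketched as follows. The ACL name is_scanner and the threshold of 10 errors per minute are hypothetical. Note that http_err_rate counts 4xx responses in general along with other client-side errors, so it is broader than 404s alone.

```haproxy
frontend www
    bind :80
    default_backend webservers

    # Track the rate of HTTP errors per client IP
    stick-table type ip size 100k expire 10m store http_err_rate(1m)
    http-request track-sc0 src

    # ACL: flag clients that caused more than 10 errors in the last minute
    # (name and threshold are hypothetical)
    acl is_scanner sc_http_err_rate(0) gt 10

    # Response policy: what to do with a flagged client
    http-request deny if is_scanner
```

The ACL only classifies the client. The last line is the response policy, and it is the part that the rest of this post varies.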

In this post, we’ll focus on the many response policies that HAProxy has to offer, including the following:

- Deny
- Tarpit
- Silent Drop
- Reject
- Shadowban

#1 Deny

This is the most straightforward response policy. No frills, just deny the request immediately and send back an error code. The client gets instant feedback that their request was stopped and you free up computer resources that would otherwise be used to service the request. It also lets you indicate to the client why they’ve been denied. For example, if they’ve exceeded your request rate limit, you can respond with a 429 Too Many Requests status to tell them so. Giving back a relevant error status can, like good karma, help you in the long run, in case you accidentally trigger the rule yourself.

Here is an example that denies an incoming request if the client has sent too many requests during the last minute. I won’t go into much detail about how to configure a rate limit threshold or how the ACL too_many_requests has been defined. See our blog post HAProxy Rate Limiting: Four Examples for more info.

    frontend www
        bind :80
        default_backend webservers

        # use a stick table to track request rates
        stick-table type ip size 100k expire 2m store http_req_rate(1m)
        http-request track-sc0 src

        # Deny if they exceed the limit
        acl too_many_requests sc_http_req_rate(0) gt 20
        http-request deny deny_status 429 if too_many_requests

The last line uses http-request deny to stop processing of the request and immediately return a 429 Too Many Requests error. Note that this works even though I’ve placed the default_backend line earlier. HAProxy evaluates http-request rules before it relays the request to the backend, regardless of where default_backend appears in the section. If you would rather log the activity without denying the request, use the http-request capture directive, like this:

    frontend www
        bind :80
        default_backend webservers

        # use a stick table to track request rates
        stick-table type ip size 100k expire 2m store http_req_rate(1m)
        http-request track-sc0 src

        # Log if they exceed the limit
        acl too_many_requests sc_http_req_rate(0) gt 20
        http-request set-var(txn.ratelimited) str(RATE-LIMITED) if too_many_requests
        http-request capture var(txn.ratelimited) len 12

The log line will show RATE-LIMITED if the request is flagged. Or, if you would like to try the example without rate limiting to get a feel for how it works, swap the condition too_many_requests with the built-in ACL TRUE, which triggers the rule for all requests:

    frontend www
        bind :80
        default_backend webservers
        http-request deny deny_status 429 if TRUE

Here’s the result:

A 429 Too Many Requests error

Apart from applying this as a punishment for improper client behavior, it can also serve as a feedback mechanism. Let’s say that you’re protecting an API from overuse by any single client. You can add a rate limit and, if a client exceeds it, deny their requests and give them back a 429 Too Many Requests error. That client can be designed to expect this response and, when it sees it, dial back its usage, perhaps by enabling a wait-and-retry period. So, client programs that call your API can use your error codes as guides for how to modify their behavior dynamically.
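One way to make that feedback explicit is to attach the standard Retry-After header to the 429 response, so a cooperative client knows how long to wait. This is a sketch that assumes a HAProxy version whose deny action accepts the hdr argument. The value of 60 seconds is an assumption chosen to match the one-minute rate window.

```haproxy
frontend www
    bind :80
    default_backend webservers

    stick-table type ip size 100k expire 2m store http_req_rate(1m)
    http-request track-sc0 src

    acl too_many_requests sc_http_req_rate(0) gt 20

    # Tell well-behaved clients how long to back off, in seconds
    # (60 is an assumed value)
    http-request deny deny_status 429 hdr Retry-After 60 if too_many_requests
```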

HAProxy supports the following response status codes:

| Status code | Meaning |
|---|---|
| 200 | OK |
| 400 | Bad Request |
| 401 | Unauthorized |
| 403 | Forbidden |
| 404 | Not Found |
| 405 | Method Not Allowed |
| 407 | Proxy Authentication Required |
| 408 | Request Timeout |
| 410 | Gone |
| 425 | Too Early |
| 429 | Too Many Requests |
| 500 | Internal Server Error |
| 502 | Bad Gateway |
| 503 | Service Unavailable |
| 504 | Gateway Timeout |

If you need to provide more information to the client about why their request was denied, then use the hdr argument to return an HTTP header with additional information. Consider this example that returns a header called Denial-Reason with a value of Exceeded rate limit:

    frontend www
        bind :80
        default_backend webservers

        # use a stick table to track request rates
        stick-table type ip size 100k expire 2m store http_req_rate(1m)
        http-request track-sc0 src

        # Deny if they exceed the limit
        acl too_many_requests sc_http_req_rate(0) gt 20
        http-request deny deny_status 429 hdr Denial-Reason "Exceeded rate limit" if too_many_requests

You can also use fetch methods in the header value, such as sc_http_req_rate, to show what their request rate was:

    http-request deny deny_status 429 hdr Denial-Reason "Exceeded rate limit. You had: %[sc_http_req_rate(0)] requests." if too_many_requests

However, not all clients will know to look at the HTTP headers to learn why they were blocked. If you want to send back information in the response body itself, which is more visible, use the string or lf-string argument, which indicates the text to return when the rule is triggered. Using string returns a raw string, while lf-string returns a log-formatted string that can contain fetch methods. This must be accompanied by the content-type argument, as shown here:

    http-request deny deny_status 429 content-type text/html lf-string "<p>Per our policy, you are limited to 20 requests per minute, but you have exceeded that limit with %[sc_http_req_rate(0)] requests per minute.</p>" if too_many_requests

The result:

A 429 error with body text

#2 Tarpit

Sometimes, sending back a prompt deny response is not enough to deter malicious users, such as automated programs (i.e. bots) attacking your site. They’ll simply retry the connection again and again. In fact, no amount of error responses will stop them from carrying out the cold calculations of their programming. In those cases, you can tarpit them. It’s a lot like answering a phone call from a robocaller, but then leaving your phone on the kitchen counter and walking away. The caller is still on the line, awaiting your reply. Eventually, you’ll hang up, but you’ve tied them up for a few minutes.

HAProxy’s tarpit response policy accepts a client’s connection but then waits a predefined time before returning a denied response. It can be enough to exhaust the resources of a persistent bot. Here’s an example. Again, I’m using the built-in TRUE ACL so that this policy is triggered for every request, which makes it easy to see in action:

    frontend www
        bind :80
        default_backend webservers
        timeout tarpit 10s
        http-request tarpit deny_status 403 if TRUE

Here, we’re using a directive called timeout tarpit to set how long HAProxy should wait before returning a response to the client. In this case, I’ve set it to 10 seconds. As with the deny response policy, tarpit accepts a deny_status, which you can set to any of the available status codes, and you can also set custom HTTP headers and response strings.
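In practice you would tarpit only flagged clients rather than everyone. A minimal sketch, reusing the rate-limit ACL from the Deny section with the same assumed threshold of 20 requests per minute:

```haproxy
frontend www
    bind :80
    default_backend webservers

    stick-table type ip size 100k expire 2m store http_req_rate(1m)
    http-request track-sc0 src

    # Hold flagged clients for 10 seconds before answering
    timeout tarpit 10s

    acl too_many_requests sc_http_req_rate(0) gt 20
    http-request tarpit deny_status 429 if too_many_requests
```

Clients under the limit are served normally. Only those over it pay the 10-second penalty on each request.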

#3 Silent Drop

Tarpitting malicious clients slows them down, but it can be a double-edged sword. In order to keep a connection open with a client, HAProxy must tie up one of its own connection slots. If an attacker owns a huge army of bots, they can eventually overwhelm the load balancer with their requests. When it gets to that point, you still have a powerful weapon at your disposal: the silent drop. Silently dropping a client means HAProxy immediately disconnects on its end, but the client is never notified and continues to wait. In fact, they will wait until their own timeout expires, however long that may be.

Consider the following example:

    frontend www
        bind :80
        default_backend webservers
        http-request silent-drop if TRUE

The client establishes a connection, sends their request, and then waits for a response. However, one never comes. From a technical standpoint, HAProxy uses various techniques to avoid sending the expected TCP reset packet, which would alert the client that the connection has been closed. The advantage that this has over tarpitting is that HAProxy consumes no resources since it no longer needs to maintain a connection to the client.

Just know that if you have any stateful equipment, such as stateful firewalls, other proxies, or other load balancers, between HAProxy and the client, then they will also keep the established connection, to their detriment. However, some proxies and load balancers, such as the ALOHA, offer Direct Server Return mode, which rewrites the connection so that the response bypasses the proxy on the outbound path. If your equipment has this ability, then you could use silent drop without issue.
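As a sketch of a more targeted use, the fragment below silently drops only clients whose address appears in a blocklist. The file path and the ACL name is_blocked are hypothetical. The file is assumed to contain one IP address or CIDR range per line.

```haproxy
frontend www
    bind :80
    default_backend webservers

    # Hypothetical file with one IP address or CIDR range per line
    acl is_blocked src -f /etc/haproxy/blocklist.acl

    # Drop the connection without notifying the client
    http-request silent-drop if is_blocked
```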

#4 Reject

In situations where you want to deny the request immediately without forcing the client to wait, but without sending back any response, you can use a reject policy. A perfect example would be when you’ve blacklisted an IP address. In that case, is it worth sending any response at all? You’ve actually got three variations of this policy, depending on the phase during which you’d like to end the connection:

- http-request reject: Closes the connection without a response after a session has been created and the HTTP parser has been initialized. Use this if you want to trigger the rule depending on Layer 7 attributes, such as cookies, HTTP headers or the requested URL.
- tcp-request content reject: Closes the connection without a response once a session has been created, but before the HTTP parser has been initialized. Use this if you don’t need to read Layer 7 attributes since this happens during an earlier phase before the HTTP parser has been initialized. You will still see the request in your logs and on the HAProxy Stats page.
- tcp-request connection reject: Closes the connection without a response at the earliest point, before a session has been created. Use this if you don’t care to see the request in your logs or on the HAProxy Stats page.

In the following example, the rule rejects all requests, since it uses the TRUE ACL:

    frontend www
        bind :80
        default_backend webservers
        tcp-request content reject if TRUE

The reject policy closes the connection but returns no response. Here’s how it looks from the client’s perspective:

A connection reset page
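The three variants can be sketched side by side, each with a condition suited to its phase. The blocklist path, the 203.0.113.0/24 range (reserved for documentation), and the User-Agent substring are all assumptions for illustration.

```haproxy
frontend www
    bind :80
    default_backend webservers

    # Earliest point: no session is created and nothing is logged
    # (hypothetical file, one IP address or CIDR range per line)
    tcp-request connection reject if { src -f /etc/haproxy/blocklist.acl }

    # Session exists but HTTP is not parsed yet; still visible in logs and stats
    tcp-request content reject if { src 203.0.113.0/24 }

    # HTTP has been parsed, so Layer 7 attributes such as headers are available
    http-request reject if { req.hdr(User-Agent) -m sub -i badbot }
```

The first two rules can only match on connection-level information such as the source address, which is why the header check has to live in the http-request rule.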

#5 Shadowban

As you’ve seen, HAProxy has several ways to deny a request, with the option of sending back meaningful error messages or dropping the call without signaling back to the client. In some extreme cases, employing these countermeasures causes an attacker to simply become more stealthy. That’s a real challenge: By becoming more overt with your denials, the attacker learns to evade detection, and then you can no longer spot them.

Social media companies play this game of cat-and-mouse with attackers often, and shadowbanning has become an effective new weapon. The most well-known definition of shadowbanning is when a forum or social media platform hides your posts from others. To you, it looks like the service is working properly, but your influence on others is reduced or eliminated, usually without you ever knowing.

I’ll use the term shadowban in a broader sense to mean tricking an attacker into thinking that their assault is effective, while, in fact, we’ve secretly redirected them towards a dummy target. Consider this example: A bot is scraping content from your website in order to publish it on a competitor’s site. It crawls your site, finds all of your images, and downloads them. You could use the set-path response policy to dynamically change the URL path that they’re targeting before it reaches the backend web server.

    http-request set-path /images/cat.jpeg if { path_beg /images/ } is_attacker

Here, a request for any file in the images directory, no matter what, causes the image cat.jpeg to be returned. This rule is triggered only if the client has been flagged as an attacker (i.e. those that crawl too many pages within a period), as indicated by the is_attacker ACL. This bot will no longer get all of your images.

The cat image
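The is_attacker ACL is not defined in the snippet above. One possible definition, offered as an assumption rather than the one used here, flags clients that request more than 100 pages per minute:

```haproxy
frontend www
    bind :80
    default_backend webservers

    stick-table type ip size 100k expire 10m store http_req_rate(1m)
    http-request track-sc0 src

    # Hypothetical definition: more than 100 requests in one minute
    acl is_attacker sc_http_req_rate(0) gt 100

    # Serve the same decoy image for every image request from a flagged client
    http-request set-path /images/cat.jpeg if { path_beg /images/ } is_attacker
```

Because set-path only rewrites the request, the backend still answers with a 200 status, and the bot has no error to react to.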

Another example is to redirect an attacker who is trying to brute force your login page. You can have HAProxy return to them a fake login page by using the return response policy, as shown in this example where we return the file fake_login.html:

    http-request return content-type text/html file /srv/www/fake_login.html if { path_beg /login } is_attacker

You can make this page look identical to your normal login page and then use JavaScript to intercept the form submissions and display a “Login failed” message.

A fake login page

By displaying a fake login page to the attacker, their efforts will never succeed, but you also stop them from knowing that they’ve been detected. That stops the cycle of escalation.


HAProxy Enterprise response policies

HAProxy Enterprise adds a few more response policies to your arsenal, which are particularly good for weeding out bad bots without denying legitimate users who may have accidentally triggered a rule.

The Antibot module asks the client (i.e. the browser) to solve a JavaScript challenge, which many bots are unable to do. Regular users will continue to be able to access your site.

You can also enable the reCAPTCHA module, which stops more sophisticated bots that can solve JavaScript challenges. It presents a Google reCAPTCHA v2 or v3 challenge that must be solved before the user can continue. These safeguards are ideal for defending against a range of attacks including website scraping, vulnerability scanning, and DDoS.

HAProxy response policies are built different

Many load balancers give you only basic response policies like deny, which can lead to the attacker learning to evade detection. HAProxy gives you an arsenal of sophisticated countermeasures that can be combined and used in an incremental fashion. Whether you want to instantly reject a connection, slow down the response, or redirect the client towards a dummy target without them knowing, HAProxy lets you do it. That flexibility is essential for fighting modern threats.
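As a sketch of what an incremental setup might look like, the fragment below escalates from deny to tarpit to silent drop as a client’s request rate climbs. The ACL names and all three thresholds are hypothetical.

```haproxy
frontend www
    bind :80
    default_backend webservers

    stick-table type ip size 100k expire 2m store http_req_rate(1m)
    http-request track-sc0 src
    timeout tarpit 10s

    # Hypothetical thresholds, in requests per minute
    acl over_limit  sc_http_req_rate(0) gt 20
    acl heavy_abuse sc_http_req_rate(0) gt 100
    acl flood       sc_http_req_rate(0) gt 500

    # Rules are evaluated in order and the first matching one ends processing,
    # so the harshest policy is listed first
    http-request silent-drop if flood
    http-request tarpit deny_status 429 if heavy_abuse
    http-request deny deny_status 429 if over_limit
```

Ordering matters here: a client over 500 requests per minute also matches the other two ACLs, so listing deny first would mean the stronger policies never fire.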

Want to stay up to date on similar topics? Subscribe to this blog! You can also follow us on Twitter and join the conversation on Slack.

