A paper on this topic was prepared for internal use within HAProxy last year, and this version is now being shared publicly. Given the critical role of SSL in securing internet communication and the challenges presented by evolving SSL technologies, reverse proxies like HAProxy must continuously adapt their SSL strategies to maintain performance and compatibility, ensuring a secure and efficient experience for users. We are committed to providing ongoing updates on these developments.

The SSL landscape has shifted dramatically in the past few years, introducing performance bottlenecks and compatibility challenges for developers. Once a reliable foundation, OpenSSL's evolution has prompted a critical reassessment of SSL strategies across the industry.

For years, OpenSSL maintained its position as the de facto standard SSL library, offering long-term stability and consistent performance. The arrival of version 3.0 in September 2021 changed everything. While designed to enhance security and modularity, the new architecture introduced significant performance regressions in multi-threaded environments, and deprecated essential APIs that many external projects relied upon. The absence of the anticipated QUIC API further complicated matters for developers who had invested in its implementation.

This transition posed a challenge for the entire ecosystem. OpenSSL 3.0 was designated as the Long-Term Support (LTS) version, while maintenance for the widely used 1.1.1 branch was discontinued. As a result, many Linux distributions had no practical choice but to adopt the new version despite its limitations. Users with performance-critical applications found themselves at a crossroads: continue with increasingly unsupported earlier versions or accept substantial penalties in performance and functionality.

Performance testing reveals the stark reality: in some multi-threaded configurations, OpenSSL 3.0 performs significantly worse than alternative SSL libraries, forcing organizations to provision more hardware just to maintain existing throughput. This raises important questions about performance, energy efficiency, and operational costs.

Examining alternatives—BoringSSL, LibreSSL, WolfSSL, and AWS-LC—reveals a landscape of trade-offs. Each offers different approaches to API compatibility, performance optimization, and QUIC support. For developers navigating the modern SSL ecosystem, understanding these trade-offs is crucial for optimizing performance, maintaining compatibility, and future-proofing their infrastructure.

Functional requirements

The functional aspects of SSL libraries determine their versatility and applicability across different software products. HAProxy’s SSL feature set was designed around the OpenSSL API, so compatibility or functionality parity is a key requirement.

Modern implementations must support a range of TLS protocol versions (from legacy TLS 1.0 to current TLS 1.3) to accommodate diverse client requirements while encouraging migration to more secure protocols.

Support for innovative, emerging protocols like QUIC plays a vital role in driving widespread adoption and technological breakthroughs.

Certificate management functionality, including chain validation, revocation checking via OCSP and CRLs, and SNI (Server Name Indication) support, is essential for proper deployment.

SSL libraries must offer comprehensive cipher suite options to meet varying security policies and compliance requirements such as PCI-DSS, HIPAA, and FIPS.

Standard features like ALPN (Application-Layer Protocol Negotiation) for HTTP/2 support, certificate transparency validation, and stapling capabilities further expand functional requirements.

Software products relying on these libraries must carefully evaluate which functional components are critical for their specific use cases while considering the overhead these features may introduce.
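As an illustration of how several of these requirements surface in an application's configuration, here is a minimal sketch using Python's standard `ssl` module, which wraps the OpenSSL build the interpreter is linked against. The policy itself (TLS 1.2 as the minimum, ECDHE with AES-GCM, HTTP/2 offered through ALPN) is an assumption chosen for the example, not a recommendation taken from this paper, and the function name is hypothetical.

```python
import ssl


def build_server_context() -> ssl.SSLContext:
    """Build a server-side TLS context with an explicit protocol policy."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Assumed policy: accept TLS 1.2 and TLS 1.3 only. Supporting legacy
    # clients would require lowering the minimum version.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.maximum_version = ssl.TLSVersion.TLSv1_3
    # Restrict the TLS 1.2 cipher suites to ECDHE key exchange with AES-GCM.
    ctx.set_ciphers("ECDHE+AESGCM")
    # Advertise HTTP/2 and HTTP/1.1 through ALPN.
    ctx.set_alpn_protocols(["h2", "http/1.1"])
    return ctx


ctx = build_server_context()
enabled_suites = [cipher["name"] for cipher in ctx.get_ciphers()]
print(ctx.minimum_version.name, ctx.maximum_version.name, len(enabled_suites))
```

A real deployment would also load a certificate chain and private key into the context before accepting connections; that step is omitted here to keep the sketch self-contained.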

Performance considerations

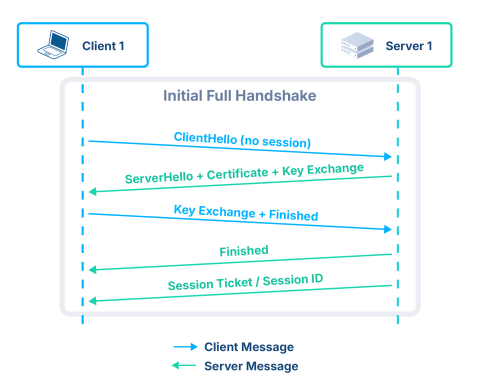

SSL/TLS operations are computationally intensive, creating significant performance challenges for software products that rely on these libraries. Handshake operations, which establish secure connections, require asymmetric cryptography that can consume substantial CPU resources, especially in high-volume environments. They also present environmental and logistical challenges alongside their computational demands.

The energy consumption of cryptographic operations directly impacts the carbon footprint of digital infrastructure relying on these security protocols. High-volume SSL handshakes and encryption workloads increase power requirements in data centers, contributing to greater electricity consumption and associated carbon emissions.

Performance of SSL libraries has become increasingly important as organizations pursue sustainability goals and green computing initiatives. Modern software products implement sophisticated core-awareness strategies that maximize single-node efficiency by distributing cryptographic workloads across all available CPU cores. This approach to processor saturation enables organizations to fully utilize existing hardware before scaling horizontally, significantly reducing both capital expenditure and energy consumption that would otherwise be required for additional servers.

By efficiently leveraging all available cores for SSL/TLS operations, a single properly configured node can often handle the same encrypted traffic volume as multiple poorly optimized servers, dramatically reducing datacenter footprint, cooling requirements, and power consumption.

These architectural improvements, when properly leveraged by SSL libraries, can deliver substantial performance gains with minimal environmental impact, a critical consideration as encrypted traffic continues to grow exponentially across global networks.

Maintenance requirements

The maintenance burden of SSL implementations presents significant challenges for software products. Security vulnerabilities in SSL libraries require immediate attention, forcing development teams to establish robust patching processes.

Software products must balance the stability of established SSL libraries against the security improvements of newer versions; this process becomes more manageable when operating system vendors provide consistent and timely updates. Documentation and expertise requirements add further complexity, as configuring SSL properly demands specialized knowledge that may be scarce within development teams. Backward compatibility concerns often complicate maintenance, as updates must protect existing functionality while implementing necessary security improvements or fixes.

The complexity and risks associated with migrating to a new SSL library version often encourage product vendors to try to stick as long as possible to the same maintenance branch, preferably an LTS version provided by the operating system’s vendor.

Current SSL library ecosystem

OpenSSL

OpenSSL has served as the industry-standard SSL library included in most operating systems for many years. A key benefit has been its simultaneous support for multiple versions over extended periods, enabling users to carefully schedule upgrades, adapt their code to accommodate new versions, and thoroughly test them before implementation.

The introduction of OpenSSL 3.0 in September 2021 posed significant challenges to the stability of the SSL ecosystem, threatening its continued reliability and sustainability.

This version was released nearly a year behind schedule, thus shortening the available timeframe for migrating applications to the new version.

The migration process was challenging due to OpenSSL's API changes, such as the deprecation of many commonly used functions and the ENGINE API that external projects relied on. This affected solutions like the pkcs11 engine used for Hardware Security Modules (HSM) and Intel’s QAT engine for hardware crypto acceleration, forcing engines to be rewritten with the new providers API.

Performance was also measurably lower in multi-threaded environments, making OpenSSL 3.0 unusable in many performance-dependent use cases.

OpenSSL also decided that the long-awaited QUIC API would ultimately not be merged, dealing a significant blow to innovators and early adopters of this technology. Developers and organizations were left without the key QUIC capabilities they had been counting on for their projects.

OpenSSL labeled version 3.0 as an LTS branch and shortly thereafter discontinued maintenance of the previous 1.1.1 LTS branch. This decision left many Linux distributions with no viable alternatives, compelling them to adopt the new version.

Users with performance-critical requirements faced limited options: either remain on older distributions that still maintained their own version 1.1.1 implementations, deploy more servers to compensate for the performance loss, or purchase expensive extended premium support contracts and maintain their own packages.

BoringSSL

BoringSSL is a fork of OpenSSL that was announced in 2014, after the Heartbleed vulnerability. This library was initially meant for Google; projects that use it must follow the "live at HEAD" model. This can lead to maintenance challenges, since the API breaks frequently and no maintenance branches are provided.

However, it stands out in the SSL ecosystem for its willingness to implement bleeding-edge features. For example, it was the first OpenSSL-based library to implement the QUIC API, which other such libraries later adopted.

HAProxy supported this library for some time, which gave us the opportunity to make progress on QUIC. That support was later abandoned because of BoringSSL's incompatibility with the HAProxy LTS model, but we continue to keep an eye on the library because it often produces valuable innovations.

LibreSSL

LibreSSL is a fork of OpenSSL 1.0.1 that also emerged after the Heartbleed vulnerability, with the aim of being a more secure alternative to OpenSSL. It started with a massive cleanup of the OpenSSL code, removing a lot of legacy and infrequently used code in the OpenSSL API.

LibreSSL later provided the libtls API, a completely new API designed as a simpler and more secure alternative to the libssl API. However, since it's an entirely different API, applications require significant modifications to adopt it.

LibreSSL prioritizes security, so features considered potentially insecure, such as 0-RTT, are not implemented; it also tends to be less performant than other libraries. Nowadays, the project focuses on evolving its libssl API with some inspiration from BoringSSL; for example, the EVP_AEAD and QUIC APIs.

LibreSSL was ported to other operating systems in the form of the libressl-portable project. Unfortunately, it is rarely packaged in Linux distributions, and is typically used in BSD environments.

HAProxy does support LibreSSL, which is currently built and tested by our continuous integration (CI) pipeline; however, not all features are supported. LibreSSL implemented the BoringSSL QUIC API in 2022, and the HAProxy team successfully ported HAProxy to it with LibreSSL 3.6.0. Unfortunately, LibreSSL does not implement all the API features needed to use HAProxy to its full potential.

WolfSSL

WolfSSL is a TLS library which initially targeted the embedded world. This stack is not a fork of OpenSSL but offers a compatibility layer, making it simpler to port applications.

Back in 2012, we tested its predecessor, CyaSSL. It had relatively good performance but lacked too many features to be considered for use. Since that time, the library has evolved with the addition of many consequential features (TLS 1.3, QUIC, etc.) while still keeping its lightweight approach and even providing a FIPS-certified cryptographic module.

In 2022, we started a port of HAProxy to WolfSSL with the help of the WolfSSL team. There were bugs and missing features in the OpenSSL compatibility layer, but as of WolfSSL 5.6.6, it became a viable option for simple setups or embedded systems. It was successfully ported to the HAProxy CI and, as such, is regularly built and tested with up-to-date WolfSSL versions.

Since WolfSSL is not OpenSSL-based at all, some behavior could change, and not all features are supported. HAProxy SSL features were designed around the OpenSSL API; this was the first port of HAProxy to an SSL library not based on the OpenSSL API, which makes it difficult to perfectly map existing features. As a result, some features occasionally require minor configuration adaptations.

We've been working with the WolfSSL team to ensure their library can be seamlessly integrated with HAProxy in mainstream Linux distributions, though this integration is still under development (https://github.com/wolfSSL/wolfssl/issues/6834).

WolfSSL is available in Ubuntu and Debian, but unfortunately, specific build options that are needed for HAProxy and CPU optimization are not activated by default. As a result, it needs to be installed and maintained manually, which can be bothersome.

AWS-LC

AWS-LC is a BoringSSL (and by extension OpenSSL) fork that started in 2019. It is intended for AWS and its customers. AWS-LC targets security and performance (particularly on AWS hardware). Unlike BoringSSL, it aims for a backward-compatible API, making it easy to maintain.

We were recently approached by the AWS team, who provided us with patches to make HAProxy compatible with AWS-LC, enabling us to test them together regularly via CI. Since HAProxy was ported to BoringSSL in the past, we inherited a lot of features that were already working with it.

AWS-LC supports modern TLS features and QUIC. In HAProxy, it supports the same features as OpenSSL 1.1.1, but it lacks some older ciphers which are not used anymore (CCM, DHE). It also lacks the engine support that was already removed in BoringSSL.

It does provide a FIPS-certified cryptographic module, which is periodically submitted for FIPS validation.

Other libraries

Mbed TLS, GnuTLS, and other libraries have also been considered; however, they would require extensive rewriting of the HAProxy SSL code. We didn't port HAProxy to these libraries because the available feature sets did not justify the amount of up-front work and maintenance effort required.

We also tested Rustls and its rustls-openssl-compat layer. Rustls could be an interesting library in the future, but the OpenSSL compatibility application binary interface (ABI) was not complete enough to make it work correctly with HAProxy in its current state. Using the native Rustls API would again require extensive rewriting of HAProxy code.

We also routinely used QuicTLS (openssl+quic) during our QUIC development. However, it does not diverge enough from OpenSSL to be considered a different library, as it is really distributed as a patchset applied on top of OpenSSL.

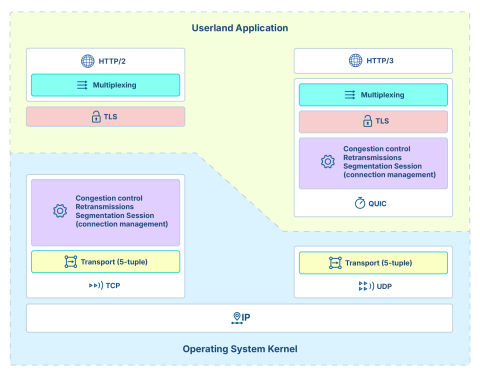

An introduction to QUIC and how it relates to SSL libraries

QUIC is an encrypted, multiplexed transport protocol that is mainly used to transport HTTP/3. It combines some of the benefits of TCP, TLS, and HTTP/2, without many of their drawbacks. It started as research work at Google in 2012 and was deployed at scale in combination with the Chrome browser in 2014. In 2015, the IETF QUIC working group was created to standardize the protocol, and published the first draft (draft-ietf-quic-transport-00) on Nov 28th, 2016. By 2020, the new IETF QUIC protocol differed quite a bit from the original one and had started to be widely adopted by browsers and some large hosting providers. Finally, the protocol was published as RFC 9000 in 2021.

One of the key goals of the protocol is to move the congestion control to userland so that application developers can experiment with new algorithms, without having to wait for operating systems to implement and deploy them. It integrates cryptography at its heart, contrary to classical TLS, which is only an additional layer on top of TCP.

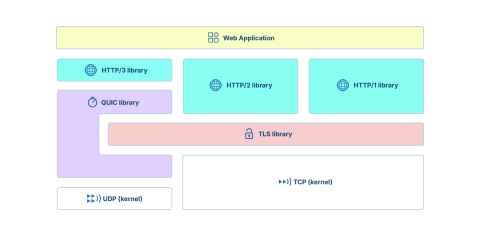

A full-stack web application relies on these key components:

HTTP/1, HTTP/2, HTTP/3 implementations (in-house or libraries)

A QUIC implementation (in-house or library)

A TLS library shared between these 3 protocol implementations

The rest (below) is the regular UDP/TCP kernel sockets

Overall, this integrates pretty well, and various QUIC implementations started very early, in order to validate some of the new protocol’s concepts and provide feedback to help them evolve. Some implementations are specific to a single project, such as HAProxy’s QUIC implementation, while others, such as ngtcp2, are made to be portable and easy to adopt by common applications.

During all this work, the need for new TLS APIs was identified in order to permit a QUIC implementation to access some essential elements conveyed in TLS records, and the required changes were introduced in BoringSSL (Google’s fork of OpenSSL). This has been the only TLS library usable by QUIC implementations for both clients and servers for a long time. One of the difficulties with working with BoringSSL is that it evolves quickly and is not necessarily suitable for products maintained for a long period of time, because new versions regularly break the build, due to changes in BoringSSL's public API.

In February 2020, Todd Short opened a pull request (PR) on OpenSSL’s GitHub repository to propose a BoringSSL-compatible implementation of the QUIC API in OpenSSL. The additional code adds a few callbacks at some key points, allowing existing QUIC implementations such as MsQuic, ngtcp2, HAProxy, and others to support OpenSSL in addition to BoringSSL. It was extremely well-received by the community. However, the OpenSSL team preferred to keep that work on hold until OpenSSL 3.0 was released; they did not reconsider this choice later, even though the schedule was drifting. During this time, developers from Akamai and Microsoft created QuicTLS. This new project essentially took the latest stable versions of OpenSSL and applied the patchset on top of them. QuicTLS soon became the de facto standard TLS library for QUIC implementations that were patiently waiting for OpenSSL 3.0 to be released and for this PR to get merged.

Finally, three years later, the OpenSSL team announced that they were not going to integrate that work and instead would create a whole new QUIC implementation from scratch. This was not what users needed or asked for and threw away years of proven work from the QUIC community. This shocking move provoked a strong reaction from the community, who had invested a lot of effort in OpenSSL via QuicTLS, but were left to find another solution: either the fast-moving BoringSSL or a more officially maintained variant of QuicTLS.

In parallel, other libs including WolfSSL, LibreSSL, and AWS-LC adopted the de facto standard BoringSSL QUIC API.

Finally, OpenSSL continues to mention QUIC in their projects, though their current focus seems to be to deliver a single-stream-capable minimum viable product (MVP) that should be sufficient for the command-line "s_client" tool. However, this approach still doesn’t offer the API that QUIC implementations have been waiting for over the last four years, forcing them to turn to QuicTLS.

The development of a transport layer like QUIC requires a totally different skillset than cryptographic library development. Such development work must be done with full transparency. The development team has degraded their project’s quality, failed to address ongoing issues, and consistently dismissed widespread community requests for even minor improvements. Validating these concerns, Curl contributor Stefan Eissing recently tried to make use of OpenSSL’s QUIC implementation with Curl and published his findings. They’re clearly not appealing, as most developers concerned about this topic would have expected.

In despair at this situation, we at HAProxy tried to figure out from the QUIC patch set if there could be a way to hack around OpenSSL without patching it, and we were clearly not alone. Roman Arutyunyan from the NGINX core team was the first to propose a solution, with a clever method that abuses the keylog callback to extract or inject the required elements and thereby provide minimal server-mode QUIC support. We adopted it as well, so users could start to familiarize themselves with QUIC and its impacts on their infrastructure, even though it does have some technical limitations (e.g., 0-RTT is not supported). This solution is only for servers, however; this hack may not work for clients (though this works for HAProxy, since QUIC is only implemented at the frontend at the moment).
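To give an idea of why the keylog callback is useful here, the sketch below parses lines in the NSS key log format, which is what OpenSSL passes to a callback registered with `SSL_CTX_set_keylog_callback`. Each TLS 1.3 line carries a label, the client random, and a secret in hexadecimal. This is only an illustration of the extraction side: it is not the NGINX or HAProxy code, the function name is hypothetical, and the sample values are dummy data rather than real secrets.

```python
from typing import Dict

# Labels of the TLS 1.3 traffic secrets in the NSS key log format.
WANTED_LABELS = {
    "CLIENT_HANDSHAKE_TRAFFIC_SECRET",
    "SERVER_HANDSHAKE_TRAFFIC_SECRET",
    "CLIENT_TRAFFIC_SECRET_0",
    "SERVER_TRAFFIC_SECRET_0",
}


def parse_keylog_line(line: str, secrets: Dict[str, bytes]) -> None:
    """Store the secret carried by one key log line if its label is wanted."""
    parts = line.split()
    if len(parts) != 3:
        return  # comment or malformed line, ignore it
    label, _client_random, secret_hex = parts
    if label in WANTED_LABELS:
        secrets[label] = bytes.fromhex(secret_hex)


# Dummy input: an all-zero client random and placeholder secrets.
dummy_random = "00" * 32
sample_lines = [
    "# comment line",
    f"CLIENT_HANDSHAKE_TRAFFIC_SECRET {dummy_random} {'aa' * 32}",
    f"SERVER_HANDSHAKE_TRAFFIC_SECRET {dummy_random} {'bb' * 32}",
    f"EXPORTER_SECRET {dummy_random} {'cc' * 32}",
]

collected: Dict[str, bytes] = {}
for sample in sample_lines:
    parse_keylog_line(sample, collected)
print(sorted(collected))
```

A QUIC stack needs these per-direction secrets to derive its packet protection keys, which is why a callback intended for debugging can stand in for part of the missing API.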

With all that in mind, the possible choices of TLS libraries for QUIC implementations in projects designed around OpenSSL are currently quite limited:

QuicTLS: closest to OpenSSL, the most likely to work well as a replacement for OpenSSL, but now suffers from OpenSSL 3+ unsolved technical problems (more on that below), since QuicTLS is rebased on top of OpenSSL

AWS-LC: fairly complete, maintained, frequent releases, pretty fast, but no dedicated LTS branch for now

WolfSSL: less complete, more adaptable, very fast, also offers support contracts, so LTS is probably negotiable

LibreSSL: comes with OpenBSD by default, lacks some features and optimizations compared to OpenSSL, but works out of the box for small sites

NGINX’s hack: servers only, works out of the box with OpenSSL (no TLS rebuild needed), but has a few limitations, and will also suffer from OpenSSL 3+ unsolved technical problems

BoringSSL: where it all comes from, but moves too fast for many projects

This unfortunate situation considerably hurts QUIC protocol adoption. It even makes it difficult to develop or build test tools to monitor a QUIC server. From an industry perspective, it looks like either WolfSSL or AWS-LC needs to offer LTS versions of their products to potentially move into a market-leading position. This would potentially obsolete OpenSSL and eliminate the need for the QuicTLS effort.

Performance issues

In SSL, performance is the most critical aspect. There are indeed very expensive operations performed at the beginning of a connection before the communication can happen. If connections are closed fast (service reloads, scale up/down, switch-over, peak connection hours, attacks, etc.), it is very easy for a server to be overwhelmed and stop responding, which in turn can make visitors try again and add even more traffic. This explains why SSL frontend gateways tend to be very powerful systems with lots of CPU cores that are able to handle traffic surges without degrading service quality.

During performance testing performed in collaboration with Intel, which led to optimizations reflected in this document, we encountered an unexpected bottleneck. We found ourselves stuck with the “h1load” generator unable to produce more than 400 connections per second on a 48-core machine. After extensive troubleshooting, traces showed that threads were waiting for each other inside the libcrypto component (part of the OpenSSL library). The load generators were set up on Ubuntu 22.04, which comes with OpenSSL 3.0.2. Rebuilding OpenSSL 1.1.1 and linking against it instantly solved the problem, unlocking 140,000 connections per second. Several team members involved in the tests got trapped linking tools against OpenSSL 3.0, eventually realizing that this version was fundamentally unsuitable for client-based performance testing purposes.
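Because the problem came from the library a tool happened to be linked against, it can help to check that at runtime before trusting a benchmark. The following is a minimal sketch for Python-based tooling, using the version data exposed by the standard `ssl` module. The function name is hypothetical, and the version tuples in the usage lines are illustrative inputs only.

```python
import ssl
from typing import Tuple


def is_openssl_3_or_later(version_text: str, version_info: Tuple[int, ...]) -> bool:
    """Return True when the version data describes OpenSSL 3.0 or newer."""
    # Forks such as LibreSSL report their own name in the version string,
    # so their numeric version must not be compared with OpenSSL's.
    if not version_text.startswith("OpenSSL"):
        return False
    return version_info[0] >= 3


# Check the library this interpreter is actually linked against.
linked_is_v3 = is_openssl_3_or_later(ssl.OPENSSL_VERSION, ssl.OPENSSL_VERSION_INFO)
print(ssl.OPENSSL_VERSION, "-> OpenSSL 3.x:", linked_is_v3)

# Illustrative inputs.
ubuntu_2204 = is_openssl_3_or_later("OpenSSL 3.0.2 15 Mar 2022", (3, 0, 0, 2, 0))
legacy_111 = is_openssl_3_or_later("OpenSSL 1.1.1w  11 Sep 2023", (1, 1, 1, 23, 15))
```

A load generator could refuse to start, or at least print a warning, when this check returns true.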

The performance problems we encountered were part of a much broader pattern. Numerous users reported performance degradation with OpenSSL 3; there is even a meta-issue created to try to centralize information about this massive performance regression that affects many areas of the library (https://github.com/OpenSSL/OpenSSL/issues/17627). Among them, there were reports about Node.js performance being divided by seven when used as a client, other tools showing a 20x processing time increase, a 30x CPU increase on threaded applications that was similar to the load generator problem, and numerous others.

Despite the huge frustration caused by the QUIC API rejection, we were still eager to help OpenSSL spot and address the massive performance regression. We worked with others to explain the root cause of the problem to the OpenSSL team, providing detailed measurements, graphs, and lock counts, such as here. OpenSSL responded by saying “we’re not going to reimplement locking callbacks because embedded systems are no longer the target” (when speaking about an Intel Xeon with 32GB RAM), and even suggested that pull requests fixing the problems are welcome, as if it were trivial for a third party to fix the issues that had caused the performance degradation.

The disconnect between user experience and developer perspective was highlighted in recent discussions, and further exemplified by the complete absence of a culture of performance testing. This was glaringly evident when a developer, after asking users to test their patches, admitted to not testing them personally due to a lack of hardware. It was then suggested that the project should simply issue a public call for hardware access, and this was apparently resolved within a week or two. By that time, however, the proposed patches had finally been performance-tested by participants outside of the project, namely from Akamai, HAProxy, and Microsoft.

When some of the project members considered a 32% performance regression “pretty near” the original performance, it signaled to our development team that any meaningful improvement was unlikely. The lack of hardware for testing indicates that the project is unwilling or unable to direct sufficient resources to address the problems, and the only meaningful metric probably is the number of open issues. Nowadays, projects using OpenSSL are starting to lose faith and are adding options to link against alternative libraries, since the situation has stagnated over the last three years – a trend that aligns with our own experience and observations.

Deep dive into the exact problem

Prior to OpenSSL 1.1.0, OpenSSL relied on a simple and efficient locking API. Applications using threads would simply initialize the OpenSSL API and pass a few pointers to the functions to be used for locking and unlocking. This had the merit of being compatible with whatever threading model an application uses. Since OpenSSL 1.1.0, these callbacks are ignored, and OpenSSL relies exclusively on the locks offered by the standard Pthread library, which can already be significantly heavier than what an application used to rely on.
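The design described above, where the library never creates locks and the application supplies them, can be sketched as follows. This is a toy model loosely inspired by the old callback style (such as `CRYPTO_set_locking_callback`), not the OpenSSL API: `ToyCryptoLibrary`, its methods, and the callback signature are all hypothetical.

```python
import threading
from typing import Callable, Dict, List, Optional

# Hypothetical callback signature: (lock index, True to acquire / False to release).
LockCallback = Callable[[int, bool], None]


class ToyCryptoLibrary:
    """Hypothetical library that locks only through an application callback."""

    NUM_LOCKS = 2  # number of lock slots the application must provide

    def __init__(self) -> None:
        self._lock_cb: Optional[LockCallback] = None
        self._session_cache: Dict[str, bytes] = {}

    def set_locking_callback(self, callback: LockCallback) -> None:
        self._lock_cb = callback

    def _lock(self, index: int, acquire: bool) -> None:
        # Without a callback the library runs unlocked (single-threaded use).
        if self._lock_cb is not None:
            self._lock_cb(index, acquire)

    def store_session(self, session_id: str, data: bytes) -> None:
        self._lock(0, True)
        try:
            self._session_cache[session_id] = data
        finally:
            self._lock(0, False)


# Application side: it owns the locks and chooses their implementation.
app_locks: List[threading.Lock] = [
    threading.Lock() for _ in range(ToyCryptoLibrary.NUM_LOCKS)
]
acquire_counts: List[int] = [0] * ToyCryptoLibrary.NUM_LOCKS


def app_locking_callback(index: int, acquire: bool) -> None:
    if acquire:
        app_locks[index].acquire()
        acquire_counts[index] += 1
    else:
        app_locks[index].release()


library = ToyCryptoLibrary()
library.set_locking_callback(app_locking_callback)
for i in range(3):
    library.store_session(f"session-{i}", b"ticket")
print(acquire_counts)
```

The point of the pattern is that the application can plug in whatever lock implementation suits its threading model, including a lighter one than the platform default.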

At that time, while locks were implemented in many places, they were rarely used in exclusive mode, and not on the most common code paths. For example, we noticed heavy usage when using crypto engines, to the point of being the main bottleneck; quite a bit on session resume and cache access, but less on the rest of the code paths.

During our tests of the Intel QAT engine two years ago, we already noticed that OpenSSL 1.1.1 could make excessive use of locking in the engine API, causing extreme contention past 16 threads. This was tolerable, considering that engines were an edge case that was probably harder to test and optimize than the rest of the code. Seeing that these were just pthread_rwlocks and that we already had a lighter implementation of read-write locks, we had the idea to provide our own pthread_rwlock functions relying on our low-overhead locks (“lorw”), so that the OpenSSL library would use those instead of the legacy pthread_rwlocks. This proved extremely effective at pushing the contention point much higher. Thanks to this improvement, the code was eventually merged, and a build-time option was added to enable this alternate locking mechanism: USE_PTHREAD_EMULATION. As shown later, this option is used again to measure what can be attributed to locking alone.

With OpenSSL 3.0, an important goal was apparently to make the library much more dynamic, with a lot of previously constant elements (e.g., algorithm identifiers, etc.) becoming dynamic and having to be looked up in a list instead of being fixed at compile-time. Since the new design allows anyone to update that list at runtime, locks were placed everywhere when accessing the list to ensure consistency. These lists are apparently scanned to find very basic configuration elements, so this operation is performed a lot. In one of the measurements provided to the team and linked to above, it was shown that the number of read locks (non-exclusive) jumped 5x compared with OpenSSL 1.1.1 just for the server mode, which is the least affected one. The measurement couldn’t be done in client mode since it just didn’t work at all; timeouts and the watchdog were triggering every few seconds.
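The effect of replacing compile-time constants with lock-protected runtime lookups can be illustrated with a toy model. Everything in this sketch is hypothetical (the registry, the algorithm names, two lookups per simulated handshake), and it uses a plain mutex where OpenSSL uses read-write locks. It only shows how the number of lock acquisitions grows with every lookup, and says nothing about OpenSSL's actual figures.

```python
import threading
from typing import List, Tuple


class CountingLock:
    """Wraps a lock and counts how many times it is acquired."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.acquisitions = 0

    def __enter__(self) -> "CountingLock":
        self._lock.acquire()
        self.acquisitions += 1
        return self

    def __exit__(self, *exc_info) -> bool:
        self._lock.release()
        return False


# Static design: identifiers are fixed when the program is built.
STATIC_ALGORITHMS = {"sha256": 1, "aes-128-gcm": 2}


class DynamicRegistry:
    """Runtime-modifiable list that must be locked on every access."""

    def __init__(self, lock: CountingLock) -> None:
        self._lock = lock
        self._entries: List[Tuple[str, int]] = [("sha256", 1), ("aes-128-gcm", 2)]

    def lookup(self, name: str) -> int:
        with self._lock:
            for entry_name, identifier in self._entries:
                if entry_name == name:
                    return identifier
        raise KeyError(name)


def handshake_static() -> Tuple[int, int]:
    return STATIC_ALGORITHMS["sha256"], STATIC_ALGORITHMS["aes-128-gcm"]


def handshake_dynamic(registry: DynamicRegistry) -> Tuple[int, int]:
    return registry.lookup("sha256"), registry.lookup("aes-128-gcm")


registry_lock = CountingLock()
registry = DynamicRegistry(registry_lock)
HANDSHAKES = 1000
for _ in range(HANDSHAKES):
    assert handshake_static() == handshake_dynamic(registry)
print("lock acquisitions, dynamic design:", registry_lock.acquisitions)
```

With many threads sharing the same registry lock, each of these acquisitions becomes a potential contention point, which is the situation described above.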

As you’ll see below, just changing the locking mechanism reveals pretty visible performance gains, proving that locking abuse is the main cause of the performance degradation that affects OpenSSL 3.0.

OpenSSL 3.1 tried to partially address the problem by placing a few atomic operations instead of locks where it appeared possible. The problem remains that the architecture was probably designed to be way more dynamic than necessary, making it unfit for performance-critical workloads, and this was clearly visible in the performance reports of the issues above.

There are two remaining issues at the moment:

After everything imaginable was done, the performance of OpenSSL 3.x remains highly inferior to that of OpenSSL 1.1.1. The ratio is hard to predict, as it depends heavily on the workload, but losses from 10% to 99% were reported.

In a rush to get rid of OpenSSL 1.1.1, the OpenSSL team declared its end of life before 3.0 was released, then postponed the release of 3.0 by more than a year without adjusting 1.1.1’s end of life date. When 3.0 was finally released, 1.1.1 had little remaining time to live, so they had to declare 3.0 “long term supported”. This means that this shiny new version, with a completely new architecture that had not been sufficiently tested yet, would become the one provided by various operating systems for several years, since they all need multiple years of support. It turns out that this version proved to be dramatically worse in terms of performance and reliability than any other version ever released.
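To put the reported losses in operational terms, a throughput loss can be converted into the extra capacity needed to serve the same load. The sketch below assumes that throughput scales linearly with the number of servers, which is a simplification, and the function name is hypothetical. The 10% and 99% inputs are the bounds quoted above.

```python
def capacity_multiplier(loss_fraction: float) -> float:
    """Servers needed, relative to before, to keep the same throughput."""
    if not 0.0 <= loss_fraction < 1.0:
        raise ValueError("loss_fraction must be in [0, 1)")
    # Each server retains (1 - loss) of its former throughput.
    return 1.0 / (1.0 - loss_fraction)


for loss in (0.10, 0.50, 0.99):
    print(f"{loss:.0%} loss -> {capacity_multiplier(loss):.2f}x servers")
```

Under this assumption, a 10% loss costs about one extra server in ten, while a 99% loss would require roughly a hundred times the original fleet.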

End users are facing a dead end:

Operating systems now ship with 3.0, which is literally unusable for certain users.

Distributions that were shipping 1.1.1 are progressively reaching end of support (except those providing extended support, but few people use these distributions, and they’re often paid).

OpenSSL 1.1.1 is no longer supported for free by the OpenSSL team, so many users cannot safely use it.

These issues sparked significant concern within the HAProxy community, fundamentally shifting their priorities. While they had initially been focused on forward-looking questions such as, "which library should we use to implement QUIC?", they were now forced to grapple with a more basic survival concern: "which SSL library will allow our websites to simply stay operational?" The performance problems were so severe that basic functionality, rather than new feature support, had become the primary consideration.

Performance testing results

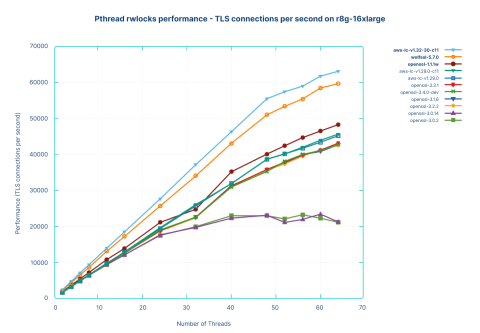

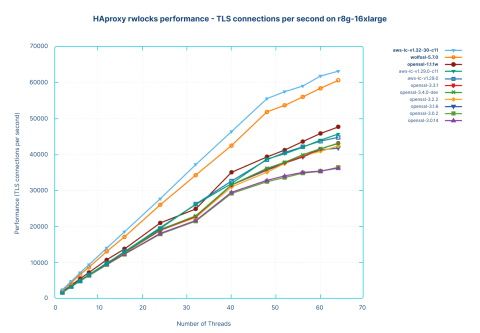

HAProxy already supported alternative libraries, but the support was mostly incomplete due to API differences. The new performance problem described above forced us to speed up the full adoption of alternatives. At the moment, HAProxy supports multiple SSL libraries in addition to OpenSSL: QuicTLS, LibreSSL, WolfSSL, and AWS-LC. QuicTLS is not included in the testing since it is simply OpenSSL plus the QUIC patches, which do not impact performance. LibreSSL is not included in the tests because its focus is primarily on code correctness and auditability, and we already noticed some significant performance losses there - probably related to the removal of certain assembler implementations of algorithms and simplifications of certain features.

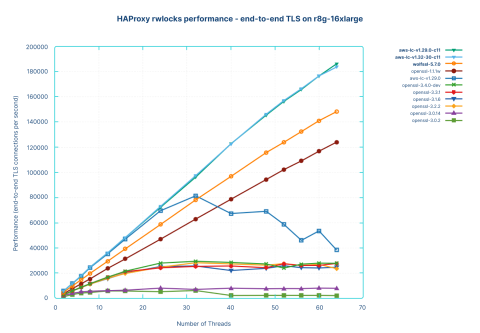

We included various versions of OpenSSL, from 1.1.1 to the latest 3.4-dev at the time, to measure the performance loss of 3.x compared with 1.1.1 and to identify any progress made by the OpenSSL team in fixing the regression. OpenSSL 3.0.2 is included specifically because it ships in Ubuntu 22.04, where most users encounter the problem after upgrading from Ubuntu 20.04, which ships the venerable OpenSSL 1.1.1. The HAProxy version used for testing was 3.1-dev1-ad946a-33 (2024/06/26).

Testing scenarios:

Server-only mode with full TLS handshake: This is the most critical and common use for internet-facing web equipment (servers and load balancers), because it requires extremely expensive asymmetric cryptographic operations. The performance impact is especially concerning because it is the absolute worst case, and a new handshake can be imposed by the client at any time. For this reason, it is also often an easy target for denial of service attacks.

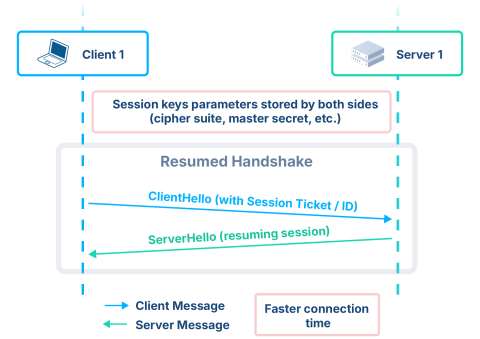

End-to-end encryption with TLS resumption: The resumption approach is the most common on the backend to reach the origin servers. Security is especially important in today’s virtualized environments, where network paths are unclear. Since we don’t want to inflict a high load on the server, TLS sessions are resumed on new TCP connections. We’re just doing the same on the frontend to match the common case for most sites.

Testing variants:

Two locking options (standard Pthread locking and HAProxy’s low-overhead locks)

Multiple SSL libraries and versions

Testing environment:

All tests were run on an AWS r8g.16xlarge instance with 64 Graviton4 cores (ARM Neoverse V2).

Server only mode with Full TLS Handshake

In this test, clients will:

Connect to the server (HAProxy in this case)

Perform a single HTTP request

Close the connection

In this simplified scenario, which simulates the most ideal conditions, backend servers are not involved because they have a negligible impact, and HAProxy responds to client requests directly. When clients reconnect, they never try to resume an existing session and instead always perform a full handshake. Using RSA, this use case is very inexpensive for the clients and very expensive for the server. This use case represents a surge of new visitors (which causes a key exchange); for example, a site that suddenly becomes popular after an event (e.g., news sites). In such tests, a ratio of 1:10 to 1:15 in terms of performance between the client and the server is usually sufficient to saturate the server. Here, the server has 64 cores, but we’ll keep a 32-core client, which is more than enough.
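The sizing argument can be checked with quick arithmetic. The sketch below assumes that the 1:10 to 1:15 ratio can be read per core (one client core keeping 10 to 15 server cores busy), which is an interpretation made for illustration rather than something measured here.

```python
# Rough sizing check for the load generator, assuming the 1:10 to 1:15
# client-to-server cost ratio can be applied per core.
SERVER_CORES = 64
CLIENT_CORES = 32


def client_cores_needed(server_cores: int, ratio: int) -> float:
    """Client cores needed to saturate the server when one client core
    can keep `ratio` server cores busy."""
    return server_cores / ratio


pessimistic = client_cores_needed(SERVER_CORES, 10)  # 1:10 ratio
optimistic = client_cores_needed(SERVER_CORES, 15)   # 1:15 ratio
headroom = CLIENT_CORES / pessimistic

print(f"client cores needed: {optimistic:.1f} to {pessimistic:.1f}")
print(f"headroom with {CLIENT_CORES} client cores: {headroom:.1f}x")
```

Under that reading, between 4 and 7 client cores would already saturate the 64-core server, so a 32-core client leaves a wide safety margin.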

The performance of the machine running the different libraries is measured in number of new connections per second. It was always verified that the machine saturates its CPU. The first test is with the regular build of HAProxy against the libraries (i.e., HAProxy doesn’t emulate the pthread locks, but lets the libraries use them):

Two libraries stand out at the top, and one at the bottom. At the top, above 63000 connections per second, in light blue, is the latest version of AWS-LC (30 commits after v1.32.0), which includes important CPU-level optimizations for RSA calculations. Previous versions did not yield such results due to a mistake in the code that failed to properly detect the processor and enable the appropriate optimizations. The second fastest library, in orange, was WolfSSL 5.7.0. We have long known this library to be heavily optimized to run fast on modest hardware, so we’re not surprised, and even pleased, to see it near the top on such a powerful machine.

In the middle, around 48000 connections per second, or 25% lower, are OpenSSL 1.1.1 and the previous version of AWS-LC (~45k), version 1.29.0. Below those two, around 42500 connections per second, are the latest versions of OpenSSL (3.1, 3.2, 3.3 and 3.4-dev). At the bottom, around 21000 connections per second, are both OpenSSL 3.0.2 and 3.0.14, the latest 3.0 version at the time of testing.

What is particularly visible on this graph is that aside from the two versions that specifically optimize for this processor, all other libraries remained grouped until around 12-16 threads. After that point, the libraries start to diverge, with the two flavors of OpenSSL 3.0 staying at the bottom and reaching their maximum performance and plateau around 32 threads. Thus, this is not a cryptography optimization issue; it's a scalability issue.
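One way to picture the difference between a cryptography cost and a scalability cost is Amdahl's law. This is a generic textbook model brought in for illustration, not the analysis behind the measurements, and the serialized fractions used below are hypothetical values rather than figures derived from the benchmark.

```python
def relative_throughput(threads: int, serial_fraction: float) -> float:
    """Amdahl's law: speedup over one thread when `serial_fraction`
    of the work cannot run in parallel (for example, time under a lock)."""
    return threads / (1 + serial_fraction * (threads - 1))


# Hypothetical serialized fractions, chosen only to show the shape of the curves.
for serial_fraction in (0.0, 0.01, 0.05):
    row = ", ".join(
        f"{threads:>2}t={relative_throughput(threads, serial_fraction):5.1f}x"
        for threads in (1, 8, 16, 32, 64)
    )
    print(f"serial={serial_fraction:.2f}: {row}")
```

In this model, a small serialized fraction is almost invisible at 8 threads but dominates at 64, which matches the general shape of curves that stay grouped at low thread counts and then diverge. Real lock contention can behave worse than the model, since throughput can fall rather than merely flatten.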

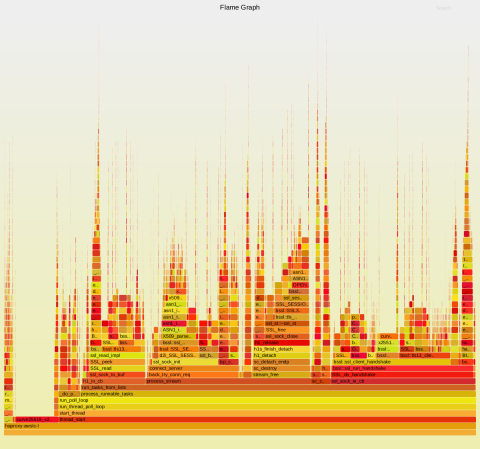

When comparing the profiling output of OpenSSL 1.1.1 and 3.0.14 for this test, the difference is obvious.

OpenSSL 1.1.1w:

| Overhead | Shared object | Type | Symbol |
|---|---|---|---|
| 46.29% | libcrypto.so.1.1 | [.] | __bn_sqr8x_mont |
| 14.73% | libcrypto.so.1.1 | [.] | __bn_mul4x_mont |
| 13.01% | libcrypto.so.1.1 | [.] | MOD_EXP_CTIME_COPY_FROM_PREBUF |
| 2.05% | libcrypto.so.1.1 | [.] | __ecp_nistz256_mul_mont |
| 1.06% | libcrypto.so.1.1 | [.] | sha512_block_armv8 |
| 0.95% | libcrypto.so.1.1 | [.] | __ecp_nistz256_sqr_mont |
| 0.61% | libcrypto.so.1.1 | [.] | ecp_nistz256_point_double |
| 0.54% | libcrypto.so.1.1 | [.] | bn_mul_mont_fixed_top |
| 0.52% | [kernel] | [k] | default_idle_call |
| 0.51% | libc.so.6 | [.] | malloc |
| 0.51% | libc.so.6 | [.] | _int_free |
| 0.50% | libcrypto.so.1.1 | [.] | BN_mod_exp_mont_consttime |
| 0.49% | libcrypto.so.1.1 | [.] | ecp_nistz256_sqr_mont |
| 0.46% | libc.so.6 | [.] | _int_malloc |
| 0.43% | libcrypto.so.1.1 | [.] | OPENSSL_cleanse |

OpenSSL 3.0.14:

| Overhead | Shared object | Type | Symbol |
|---|---|---|---|
| 19.12% | libcrypto.so.3 | [.] | __bn_sqr8x_mont |
| 17.33% | libc.so.6 | [.] | __aarch64_ldadd4_acq |
| 15.14% | libc.so.6 | [.] | pthread_rwlock_unlock@@GLIBC_2.34 |
| 12.48% | libc.so.6 | [.] | pthread_rwlock_rdlock@@GLIBC_2.34 |
| 8.55% | libc.so.6 | [.] | __aarch64_cas4_rel |
| 6.04% | libcrypto.so.3 | [.] | __bn_mul4x_mont |
| 5.39% | libcrypto.so.3 | [.] | MOD_EXP_CTIME_COPY_FROM_PREBUF |
| 1.59% | libcrypto.so.3 | [.] | __ecp_nistz256_mul_mont |
| 0.80% | libcrypto.so.3 | [.] | __aarch64_ldadd4_relax |
| 0.74% | libcrypto.so.3 | [.] | __ecp_nistz256_sqr_mont |
| 0.53% | libcrypto.so.3 | [.] | __aarch64_ldadd8_relax |
| 0.50% | libcrypto.so.3 | [.] | ecp_nistz256_point_double |
| 0.43% | libcrypto.so.3 | [.] | sha512_block_armv8 |
| 0.30% | libcrypto.so.3 | [.] | ecp_nistz256_sqr_mont |
| 0.24% | libc.so.6 | [.] | malloc |
| 0.23% | libcrypto.so.3 | [.] | bn_mul_mont_fixed_top |
| 0.23% | libc.so.6 | [.] | _int_free |

OpenSSL 3.0.14 spends about 27% of its time acquiring and releasing read locks, something that should definitely not be needed during key exchange operations. Adding roughly 26% spent in atomic operations, about 53% of the CPU is spent doing non-useful work.
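These totals can be reproduced from the top rows of the OpenSSL 3.0.14 profile. The sketch below reduces each row to three fields and classifies symbols by name prefix; that classification rule is an assumption made for illustration, not a feature of perf.

```python
# Top rows of the OpenSSL 3.0.14 profile, reduced to
# "overhead shared-object symbol" for easier parsing.
PROFILE = """\
19.12% libcrypto.so.3 __bn_sqr8x_mont
17.33% libc.so.6 __aarch64_ldadd4_acq
15.14% libc.so.6 pthread_rwlock_unlock@@GLIBC_2.34
12.48% libc.so.6 pthread_rwlock_rdlock@@GLIBC_2.34
8.55% libc.so.6 __aarch64_cas4_rel
6.04% libcrypto.so.3 __bn_mul4x_mont
5.39% libcrypto.so.3 MOD_EXP_CTIME_COPY_FROM_PREBUF
"""


def classify(symbol: str) -> str:
    """Bucket a symbol by name prefix (illustrative heuristic)."""
    if symbol.startswith("pthread_rwlock_"):
        return "locks"
    if symbol.startswith("__aarch64_"):
        return "atomics"
    return "other"


def summarize(profile: str) -> dict:
    """Sum the overhead percentages per bucket."""
    totals = {"locks": 0.0, "atomics": 0.0, "other": 0.0}
    for line in profile.splitlines():
        percent, _shared_object, symbol = line.split()
        totals[classify(symbol)] += float(percent.rstrip("%"))
    return totals


totals = summarize(PROFILE)
synchronization = totals["locks"] + totals["atomics"]
print(f"locks:   {totals['locks']:.2f}%")
print(f"atomics: {totals['atomics']:.2f}%")
print(f"locks + atomics: {synchronization:.2f}%")
```

The same helper can be pointed at the other profiles in this article to compare how much time each build spends on synchronization rather than on cryptography.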

Let’s examine how much performance can be recovered by building with USE_PTHREAD_EMULATION=1. (The libraries will use HAProxy’s low-overhead locks instead of Pthread locks.)
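For readers who want to reproduce this build, the sketch below assembles a plausible `make` command line. Only `USE_PTHREAD_EMULATION=1` comes from this article; `TARGET=linux-glibc`, `USE_OPENSSL=1`, `SSL_INC`, and `SSL_LIB` are standard HAProxy build variables, and the install prefix is a hypothetical path. The exact command used for the benchmark is not given here.

```python
import shlex


def haproxy_make_command(ssl_prefix=None, pthread_emulation=True):
    """Build the argument list for compiling HAProxy against an SSL library.

    ssl_prefix is a hypothetical install prefix for a custom-built library.
    """
    args = ["make", "TARGET=linux-glibc", "USE_OPENSSL=1"]
    if ssl_prefix:
        args += [f"SSL_INC={ssl_prefix}/include", f"SSL_LIB={ssl_prefix}/lib"]
    if pthread_emulation:
        # Makes the library use HAProxy's low-overhead locks instead of Pthread locks.
        args.append("USE_PTHREAD_EMULATION=1")
    return args


# Print the command instead of running it, so it can be reviewed first.
print(shlex.join(haproxy_make_command("/opt/openssl-3.0.14")))
```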

The results show that the performance remains exactly the same for all libraries, except OpenSSL 3.0, which significantly increased to reach around 36000 connections per second. The profile now looks like this:

OpenSSL 3.0.14:

| Overhead | Shared object | Type | Symbol |
|---|---|---|---|
| 33.03% | libcrypto.so.3 | [.] | __bn_sqr8x_mont |
| 10.63% | haproxy-openssl-3.0.14-emu | [.] | pthread_rwlock_wrlock |
| 10.34% | libcrypto.so.3 | [.] | __bn_mul4x_mont |
| 9.27% | libcrypto.so.3 | [.] | MOD_EXP_CTIME_COPY_FROM_PREBUF |
| 5.63% | haproxy-openssl-3.0.14-emu | [.] | pthread_rwlock_rdlock |
| 3.15% | haproxy-openssl-3.0.14-emu | [.] | pthread_rwlock_unlock |
| 2.75% | libcrypto.so.3 | [.] | __ecp_nistz256_mul_mont |
| 2.19% | libcrypto.so.3 | [.] | __aarch64_ldadd4_relax |
| 1.26% | libcrypto.so.3 | [.] | __ecp_nistz256_sqr_mont |
| 1.10% | libcrypto.so.3 | [.] | __aarch64_ldadd8_relax |
| 0.87% | libcrypto.so.3 | [.] | ecp_nistz256_point_double |
| 0.72% | libcrypto.so.3 | [.] | sha512_block_armv8 |
| 0.50% | libcrypto.so.3 | [.] | ecp_nistz256_sqr_mont |
| 0.42% | libc.so.6 | [.] | malloc |
| 0.41% | libc.so.6 | [.] | _int_free |

The locks used were the only difference between the two tests. The amount of time spent in locks noticeably diminished, but not enough to explain that big a difference. However, it’s worth noting that pthread_rwlock_wrlock made its appearance, as it wasn’t visible in the previous profile. It’s likely that, upon contention, the original function immediately went to sleep in the kernel, explaining why its waiting time was not accounted for (perf top measures CPU time).
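The distinction between CPU time and elapsed time can be demonstrated in a few lines. In this sketch, `time.sleep` stands in for a thread parked in the kernel on a contended lock, and a busy loop stands in for a lock that spins in user space; treating the emulated locks as spinning is an assumption made for the illustration.

```python
import time


def measure(task):
    """Return (wall-clock seconds, CPU seconds) consumed while running task."""
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    task()
    return time.perf_counter() - wall_start, time.process_time() - cpu_start


def sleeping_wait():
    # Stand-in for a thread put to sleep in the kernel while waiting for a lock.
    time.sleep(0.2)


def spinning_wait():
    # Stand-in for a lock that busy-waits in user space.
    deadline = time.perf_counter() + 0.2
    while time.perf_counter() < deadline:
        pass


sleep_wall, sleep_cpu = measure(sleeping_wait)
spin_wall, spin_cpu = measure(spinning_wait)
print(f"sleeping: wall={sleep_wall:.2f}s cpu={sleep_cpu:.2f}s")
print(f"spinning: wall={spin_wall:.2f}s cpu={spin_cpu:.2f}s")
```

Both waits take about the same elapsed time, but only the spinning one accumulates CPU time. A profiler that samples CPU time therefore attributes cost to the spinning wait and almost none to the sleeping one, even though both delay the work equally.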

End-to-end encryption with TLS resumption

The next test concerns the most optimal case, that is, when the proxy has the ability to resume a TLS session from the client’s ticket, and then uses session resumption as well to connect to the backend server. In this mode, asymmetric cryptography is used only once per client and once per server for the time it takes to get a session ticket, and everything else happens using lighter cryptography.

This scenario represents the most common use case for applications hosted on public cloud infrastructures: clients connected all day to an application don't do it over the same TCP connection; connections are transparently closed when not used for a while, and reopened on activity, with the TLS session resumed. As a result, the cost of the initial asymmetric cryptography becomes negligible when amortized over numerous requests and connections. In addition, since this is a public cloud, encryption between the proxy and the backend servers is mandatory, so there’s really SSL on both sides.

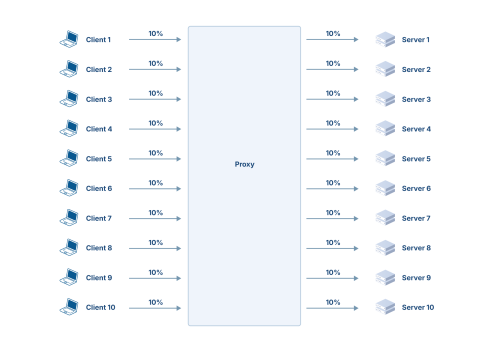

Given that performance is going to be much higher, a single client and a single server are no longer sufficient for the benchmark. Thus, we’ll need 10 clients and 10 servers per proxy, each taking 10% of the total load, which gives the following theoretical setup:

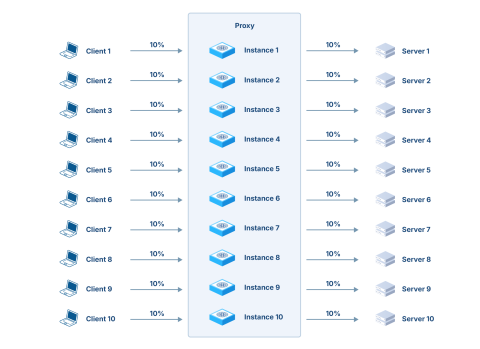

We can simplify the configuration by having 10 distinct instances of the proxy within the same process (i.e., 10 ports, one per client -> server association):

Since the connections with the client and server are using the exact same protocols and behavior (http/1.1, close, resume), we can daisy-chain each instance to the next one and keep only client 1 and server 10:

With this setup, only a single client and a single server are needed, each seeing 10% of the load, with the proxy having to deal 10 times with these 10%, hence seeing 100% of the load.
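The daisy chain described above can be sketched as a small generator that emits ten chained `listen` sections. The ports, origin address, certificate path, and section names are hypothetical, and this is not the configuration used for the benchmark; it only illustrates the topology with standard HAProxy keywords.

```python
def chained_config(instances=10, first_port=8001,
                   origin="192.0.2.10:8443",
                   cert="/etc/haproxy/site.pem"):
    """Return HAProxy configuration text for `instances` daisy-chained hops.

    All default values are placeholders chosen for illustration.
    """
    sections = []
    for index in range(instances):
        port = first_port + index
        is_last = index == instances - 1
        # Every hop forwards to the next one; only the last reaches the origin.
        target = origin if is_last else f"127.0.0.1:{port + 1}"
        sections.append(
            f"listen hop{index + 1}\n"
            f"    mode http\n"
            f"    option httpclose\n"
            f"    bind :{port} ssl crt {cert}\n"
            f"    server next {target} ssl verify none\n"
        )
    return "\n".join(sections)


print(chained_config())
```

With this layout, the client connects to the first port and the single origin server only receives what the last hop forwards, while the proxy process terminates and originates TLS ten times per request.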

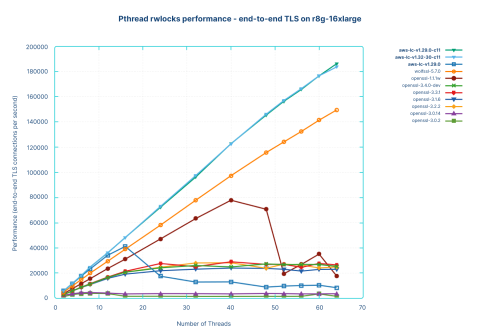

The first test was run against the regular HAProxy version, keeping the default locks. The performance is measured in end-to-end connections per second; that is, one connection accepted from the client and one connection emitted to the server count together as one end-to-end connection.

Let’s ignore the highest two curves for now. The orange curve is again WolfSSL, showing excellent linear scalability up to 64 cores, where it reaches 150000 end-to-end connections per second, limited only by the number of available CPU cores. This also demonstrates HAProxy’s scalability: it can deliver linear performance scaling within a single process as the number of cores increases.

The brown curve below it is OpenSSL 1.1.1w; this used to scale quite well with rekeying, but when resuming and connecting to a server, the scalability disappears and performance degrades at 40 threads. Performance then collapses to the equivalent of 8 threads when reaching 64 threads, at 17800 connections per second. The performance profiling clearly reveals the cause: locking and atomics alone are wasting around 80% of the CPU cycles.

OpenSSL 1.1.1w:

| Overhead | Shared object | Type | Symbol |
|---|---|---|---|
| 72.83% | libc.so.6 | [.] | pthread_rwlock_wrlock@@GLIBC_2.34 |
| 1.99% | libc.so.6 | [.] | __aarch64_cas4_acq |
| 1.47% | libcrypto.so.1.1 | [.] | fe51_mul |
| 1.30% | libc.so.6 | [.] | __aarch64_cas4_relax |
| 1.24% | libcrypto.so.1.1 | [.] | fe_mul |
| 1.00% | libc.so.6 | [.] | __aarch64_ldset4_acq |
| 0.86% | libcrypto.so.1.1 | [.] | sha512_block_armv8 |
| 0.77% | [kernel] | [k] | futex_q_lock |
| 0.70% | [kernel] | [k] | queued_spin_lock_slowpath |
| 0.70% | libcrypto.so.1.1 | [.] | fe51_sq |
| 0.68% | libcrypto.so.1.1 | [.] | x25519_scalar_mult |
| 0.56% | libc.so.6 | [.] | pthread_rwlock_unlock@@GLIBC_2.34 |

The worst-performing libraries, the flat curves at the bottom, are once again OpenSSL 3.0.2 and 3.0.14. They both fail to scale past 2 threads; 3.0.2 even collapses at 16 threads, reaching performance levels that are indistinguishable from the X axis and showing 1500-1600 connections per second at 16 threads and beyond, equivalent to just 1% of WolfSSL! OpenSSL 3.0.14 is marginally better, peaking at 3700 connections per second, or 2.5% of WolfSSL. In blunt terms: running OpenSSL 3.0.2 as shipped with Ubuntu 22.04 results in 1/100 of WolfSSL’s performance on identical hardware! To put this into perspective, you would have to deploy 100 times the number of machines to handle the same traffic, solely because of the underlying SSL library.

It’s also visible that a 32-core system running optimally at 63000 connections per second on OpenSSL 1.1.1 would collapse to only 1500 connections per second on OpenSSL 3.0.2, or 1/42 of its performance, for example, after upgrading from Ubuntu 20.04 to 22.04. This is exactly what many of our users are experiencing at the moment. It is also understandable that upgrading to the more recent Ubuntu 24.04 only addresses a tiny part of the problem, by only roughly doubling the performance with OpenSSL 3.0.14.
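The ratios quoted in the last two paragraphs follow directly from the measured connection rates, as this short calculation shows. The constant names are labels chosen for this sketch; the values are the figures given above.

```python
# Connection rates (per second) quoted in the text.
WOLFSSL_64_THREADS = 150_000
OPENSSL_3_0_2 = 1_500
OPENSSL_3_0_14 = 3_700
OPENSSL_1_1_1_32_CORES = 63_000


def percent_of(value: float, reference: float) -> float:
    """Express value as a percentage of reference."""
    return 100.0 * value / reference


print(f"3.0.2 vs WolfSSL:  {percent_of(OPENSSL_3_0_2, WOLFSSL_64_THREADS):.1f}%")
print(f"3.0.14 vs WolfSSL: {percent_of(OPENSSL_3_0_14, WOLFSSL_64_THREADS):.1f}%")
print(f"machines needed to match WolfSSL: {WOLFSSL_64_THREADS / OPENSSL_3_0_2:.0f}x")
print(f"1.1.1 to 3.0.2 slowdown: {OPENSSL_1_1_1_32_CORES / OPENSSL_3_0_2:.0f}x")
```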

Here is a performance profile of the process running on OpenSSL 3.0.2:

| Overhead | Shared object | Type | Symbol |
|---|---|---|---|
| 14.52% | [kernel] | [k] | default_idle_call |
| 14.15% | libc.so.6 | [.] | __aarch64_ldadd4_acq |
| 9.87% | libc.so.6 | [.] | pthread_rwlock_unlock@@GLIBC_2.34 |
| 7.32% | libcrypto.so.3 | [.] | ossl_sa_doall_arg |
| 7.28% | libc.so.6 | [.] | pthread_rwlock_rdlock@@GLIBC_2.34 |
| 6.23% | [kernel] | [k] | arch_local_irq_enable |
| 3.35% | libcrypto.so.3 | [.] | __aarch64_ldadd8_relax |
| 2.80% | libc.so.6 | [.] | __aarch64_cas4_rel |
| 2.04% | [kernel] | [k] | arch_local_irq_restore |
| 1.32% | libcrypto.so.3 | [.] | OPENSSL_LH_doall_arg |
| 1.11% | libcrypto.so.3 | [.] | __aarch64_ldadd4_relax |
| 0.87% | [kernel] | [k] | futex_q_lock |
| 0.84% | libcrypto.so.3 | [.] | fe51_mul |
| 0.82% | [kernel] | [k] | el0_svc_common.constprop.0 |
| 0.74% | libcrypto.so.3 | [.] | fe_mul |
| 0.65% | libcrypto.so.3 | [.] | OPENSSL_LH_flush |
| 0.64% | libcrypto.so.3 | [.] | OPENSSL_LH_doall |
| 0.62% | [kernel] | [k] | futex_wake |
| 0.58% | libc.so.6 | [.] | _int_malloc |
| 0.57% | [kernel] | [k] | wake_q_add_safe |
| 0.53% | libcrypto.so.3 | [.] | sha512_block_armv8 |

What is visible here is that all the CPU is wasted in locks and atomic operations and wake-up/sleep cycles, explaining why the CPU cannot go higher than 350-400%. The machine seems to be waiting for something while the locks are sleeping, causing all the work to be extremely serialized.

Another concerning curve is AWS-LC, the blue one near the bottom. It shows significantly higher performance than other libraries for a few threads, and then suddenly collapses when the number of cores increases. The profile reveals that this is definitely a locking issue, and it is confirmed by perf top:

AWS-LC 1.29.0:

| Overhead | Shared object | Type | Symbol |
|---|---|---|---|
| 86.01% | libc.so.6 | [.] | pthread_rwlock_wrlock@@GLIBC_2.34 |
| 2.43% | libc.so.6 | [.] | __aarch64_cas4_relax |
| 1.78% | libc.so.6 | [.] | __aarch64_cas4_acq |
| 1.13% | [kernel] | [k] | futex_q_lock |
| 1.09% | libc.so.6 | [.] | __aarch64_ldset4_acq |
| 0.82% | libc.so.6 | [.] | __aarch64_swp4_relax |
| 0.76% | [kernel] | [k] | queued_spin_lock_slowpath |
| 0.65% | haproxy-aws-lc-v1.29.0-std | [.] | curve25519_x25519_byte_scalarloop |
| 0.25% | [kernel] | [k] | futex_get_value_locked |
| 0.23% | haproxy-aws-lc-v1.29.0-std | [.] | curve25519_x25519base_byte_scalarloop |
| 0.15% | libc.so.6 | [.] | __aarch64_cas4_rel |
| 0.13% | libc.so.6 | [.] | _int_malloc |

The locks take most of the CPU, atomic ops quite a bit (particularly a CAS – compare-and-swap – operation that resists contention poorly, since the operation might have to be attempted many times before succeeding), and even some in-kernel locks (futex, etc.). Approximately a year ago, during our initial x86 testing with library version 1.19, we observed this behavior, but did not conduct a thorough investigation at the time.
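To show why a compare-and-swap loop copes poorly with contention, here is a minimal simulation. Python has no hardware CAS, so a small lock stands in for the atomic instruction; the class and function names are invented for this sketch and do not correspond to any library discussed here.

```python
import threading


class SimulatedAtomic:
    """Integer with compare-and-swap; a lock stands in for the CPU instruction."""

    def __init__(self, value=0):
        self._value = value
        self._guard = threading.Lock()

    def load(self):
        return self._value

    def compare_and_swap(self, expected, new):
        """Store new only if the current value still equals expected."""
        with self._guard:
            if self._value != expected:
                return False
            self._value = new
            return True


def increment(counter):
    """Increment through a CAS loop; return how many attempts failed."""
    retries = 0
    while True:
        seen = counter.load()
        if counter.compare_and_swap(seen, seen + 1):
            return retries
        retries += 1  # another thread changed the value first, so try again


def run(threads=8, increments=2000):
    """Hammer one shared counter from several threads."""
    counter = SimulatedAtomic()
    retry_counts = [0] * threads

    def worker(index):
        for _ in range(increments):
            retry_counts[index] += increment(counter)

    pool = [threading.Thread(target=worker, args=(i,)) for i in range(threads)]
    for thread in pool:
        thread.start()
    for thread in pool:
        thread.join()
    return counter.load(), sum(retry_counts)


final_value, failed_attempts = run()
print(f"final value: {final_value}, failed CAS attempts: {failed_attempts}")
```

The final value is always correct, but every failed attempt is work thrown away. On real hardware with many cores updating the same cache line, those retries multiply, which is consistent with the CAS helpers showing up in the profile.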

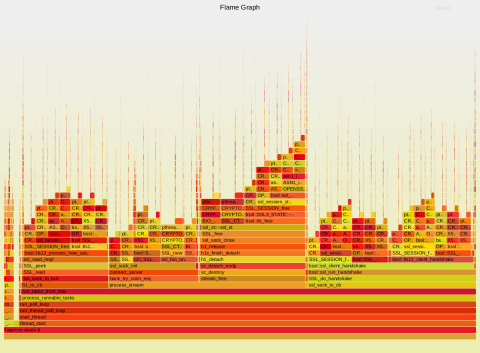

Digging into the flame graph reveals that it’s essentially the reference counting operations that cost a lot of locking:

With two libraries significantly affected by the cost of locking, we ran a new series of tests using HAProxy’s locks. (HAProxy was then rebuilt with USE_PTHREAD_EMULATION=1.)

The results were much better. OpenSSL 1.1.1 is now pretty much linear, reaching 124000 end-to-end connections per second, with a much cleaner performance profile, and less than 3% of CPU cycles spent in locks.

OpenSSL 1.1.1w:

| Overhead | Shared object | Type | Symbol |
|---|---|---|---|
| 7.52% | libcrypto.so.1.1 | [.] | fe51_mul |
| 6.47% | libcrypto.so.1.1 | [.] | fe_mul |
| 4.68% | libcrypto.so.1.1 | [.] | sha512_block_armv8 |
| 3.64% | libcrypto.so.1.1 | [.] | fe51_sq |
| 3.42% | libcrypto.so.1.1 | [.] | x25519_scalar_mult |
| 2.67% | haproxy-openssl-1.1.1w-emu | [.] | pthread_rwlock_wrlock |
| 2.48% | libcrypto.so.1.1 | [.] | fe_sq |
| 2.33% | libc.so.6 | [.] | _int_malloc |
| 2.04% | libc.so.6 | [.] | _int_free |
| 1.84% | [kernel] | [k] | __wake_up_common_lock |
| 1.83% | libc.so.6 | [.] | cfree@GLIBC_2.17 |
| 1.80% | libc.so.6 | [.] | malloc |
| 1.59% | libcrypto.so.1.1 | [.] | OPENSSL_cleanse |
| 1.10% | [kernel] | [k] | el0_svc_common.constprop.0 |
| 0.95% | libcrypto.so.1.1 | [.] | cmov |
| 0.91% | libcrypto.so.1.1 | [.] | SHA512_Final |
| 0.77% | libc.so.6 | [.] | __memcpy_generic |
| 0.77% | libc.so.6 | [.] | __aarch64_swp4_rel |
| 0.73% | libc.so.6 | [.] | malloc_consolidate |
| 0.71% | [kernel] | [k] | kmem_cache_free |

OpenSSL 3.0.2 keeps the same structural defects but doesn’t collapse until 32 threads (compared to 12 previously), revealing more clearly how it uses its locks and atomic ops (96% locks).

OpenSSL 3.0.2:

| Overhead | Shared object | Type | Symbol |
|---|---|---|---|
| 77.58% | haproxy-openssl-3.0.2-emu | [.] | pthread_rwlock_rdlock |
| 18.02% | haproxy-openssl-3.0.2-emu | [.] | pthread_rwlock_wrlock |
| 0.51% | libcrypto.so.3 | [.] | ossl_sa_doall_arg |
| 0.39% | haproxy-openssl-3.0.2-emu | [.] | pthread_rwlock_unlock |
| 0.34% | libcrypto.so.3 | [.] | OPENSSL_LH_doall_arg |
| 0.27% | libcrypto.so.3 | [.] | OPENSSL_LH_flush |
| 0.26% | libcrypto.so.3 | [.] | OPENSSL_LH_doall |
| 0.23% | libcrypto.so.3 | [.] | __aarch64_ldadd8_relax |
| 0.13% | libcrypto.so.3 | [.] | __aarch64_ldadd4_relax |

OpenSSL 3.0.14 maintains its (admittedly low) level until 64 threads, but this time with a performance of around 8000 connections per second, or slightly more than twice the performance with Pthread locks, also exhibiting an excessive use of locks (89% CPU usage).

OpenSSL 3.0.14:

| Overhead | Shared object | Type | Symbol |
|---|---|---|---|
| 60.18% | haproxy-openssl-3.0.14-emu | [.] | pthread_rwlock_rdlock |
| 28.69% | haproxy-openssl-3.0.14-emu | [.] | pthread_rwlock_unlock |
| 0.55% | libcrypto.so.3 | [.] | fe51_mul |
| 0.49% | libcrypto.so.3 | [.] | fe_mul |
| 0.46% | libcrypto.so.3 | [.] | __aarch64_ldadd4_relax |
| 0.33% | libcrypto.so.3 | [.] | sha512_block_armv8 |
| 0.27% | libcrypto.so.3 | [.] | fe51_sq |
| 0.26% | libcrypto.so.3 | [.] | x25519_scalar_mult |
| 0.26% | libc.so.6 | [.] | _int_malloc |
| 0.22% | libc.so.6 | [.] | _int_free |

The latest OpenSSL versions replaced many locks with atomics, but these have become excessive, as can be seen below with __aarch64_ldadd4_relax(), an atomic-add helper typically used for reference counting and hand-rolled locking, which still consumes a lot of CPU.

OpenSSL 3.4.0-dev:

| Overhead | Shared object | Type | Symbol |
|---|---|---|---|
| 37.24% | libcrypto.so.3 | [.] | __aarch64_ldadd4_relax |
| 8.91% | libcrypto.so.3 | [.] | evp_md_init_internal |
| 8.68% | libcrypto.so.3 | [.] | EVP_MD_CTX_copy_ex |
| 7.18% | libcrypto.so.3 | [.] | EVP_DigestUpdate |
| 2.03% | libcrypto.so.3 | [.] | fe51_mul |
| 1.92% | libcrypto.so.3 | [.] | EVP_DigestFinal_ex |
| 1.78% | libcrypto.so.3 | [.] | fe_mul |
| 1.45% | haproxy-openssl-3.4.0-dev-emu | [.] | pthread_rwlock_rdlock |
| 1.43% | haproxy-openssl-3.4.0-dev-emu | [.] | pthread_rwlock_unlock |
| 1.22% | libcrypto.so.3 | [.] | sha512_block_armv8 |
| 1.09% | libcrypto.so.3 | [.] | fe51_sq |
| 0.86% | libc.so.6 | [.] | _int_malloc |
| 0.85% | libcrypto.so.3 | [.] | x25519_scalar_mult |
| 0.77% | libc.so.6 | [.] | _int_free |

The WolfSSL curve doesn’t change at all; it clearly doesn’t need locks.

The AWS-LC curve goes much higher before collapsing (32 threads – 81000 connections per second), but still under heavy locking.

AWS-LC 1.29.0:

| Overhead | Shared object | Type | Symbol |
|---|---|---|---|
| 69.57% | haproxy-aws-lc-v1.29.0-emu | [.] | pthread_rwlock_wrlock |
| 4.80% | haproxy-aws-lc-v1.29.0-emu | [.] | curve25519_x25519_byte_scalarloop |
| 1.65% | haproxy-aws-lc-v1.29.0-emu | [.] | curve25519_x25519base_byte_scalarloop |
| 0.93% | haproxy-aws-lc-v1.29.0-emu | [.] | pthread_rwlock_unlock |
| 0.73% | [kernel] | [k] | __wake_up_common_lock |
| 0.52% | libc.so.6 | [.] | _int_malloc |
| 0.47% | libc.so.6 | [.] | _int_free |
| 0.45% | haproxy-aws-lc-v1.29.0-emu | [.] | sha256_block_armv8 |
| 0.41% | haproxy-aws-lc-v1.29.0-emu | [.] | SHA256_Final |

A new flamegraph of AWS-LC was produced, showing much narrower spikes (which is unsurprising since the performance was roughly doubled).

Reference counting should normally not employ locks, so we reviewed the AWS-LC code to see if something could be improved. We discovered that there are, in fact, two implementations of the reference counting functions: a generic one relying on Pthread rwlocks, and a more modern one involving atomic operations supported since gcc-4.7, that’s only selected for compilers configured to adopt the C11 standard. This has been the default since gcc-5. Given that our tests were made with gcc-11.4, we should be covered. A deeper analysis revealed that the CMakeFile used to configure the project forces the standard to the older C99 unless a variable, CMAKE_C_STANDARD, is set.

Rebuilding the library with CMAKE_C_STANDARD=11 radically changed the performance and resulted in the topmost curves attributed to the -c11 variants of the library. This time, there is no difference between the regular build and the emulated locks, since the library no longer uses locks on the fast path. Now, just as with WolfSSL, performance scales linearly with the number of cores and threads. Now it is pretty visible that the library is more performant, reaching 183000 end-to-end connections per second at 64 threads – or about 20% higher than WolfSSL and 50% higher than OpenSSL 1.1.1w. The profile shows no more locks.
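For anyone building an affected AWS-LC release, the variable can be passed at configure time. The sketch below assembles such a command; `-S`, `-B`, and `-D` are standard CMake options and `CMAKE_C_STANDARD` is the variable named above, while the directory names are hypothetical. The exact command used for these tests is not given here, and releases that include the upstream fix should not need the override.

```python
import shlex


def aws_lc_configure_command(source_dir, build_dir, c_standard=11):
    """Build the cmake configure command that requests a given C standard.

    source_dir and build_dir are placeholder paths for illustration.
    """
    return [
        "cmake",
        "-S", source_dir,
        "-B", build_dir,
        # Without this, affected releases fall back to C99 and use
        # rwlock-based reference counting instead of atomics.
        f"-DCMAKE_C_STANDARD={c_standard}",
    ]


# Print the command instead of running it, so it can be reviewed first.
print(shlex.join(aws_lc_configure_command("aws-lc", "aws-lc/build")))
```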

AWS-LC 1.29.0 (C11 build):

| Overhead | Shared object | Type | Symbol |
|---|---|---|---|
| 16.61% | haproxy-aws-lc-v1.29.0-c11-emu | [.] | curve25519_x25519_byte_scalarloop |
| 5.69% | haproxy-aws-lc-v1.29.0-c11-emu | [.] | curve25519_x25519base_byte_scalarl |
| 2.65% | [kernel] | [k] | __wake_up_common_lock |
| 1.60% | libc.so.6 | [.] | _int_malloc |
| 1.55% | haproxy-aws-lc-v1.29.0-c11-emu | [.] | sha256_block_armv8 |
| 1.53% | [kernel] | [k] | el0_svc_common.constprop.0 |
| 1.52% | libc.so.6 | [.] | _int_free |
| 1.36% | haproxy-aws-lc-v1.29.0-c11-emu | [.] | SHA256_Final |
| 1.27% | libc.so.6 | [.] | malloc |
| 1.22% | haproxy-aws-lc-v1.29.0-c11-emu | [.] | OPENSSL_free |
| 0.93% | [kernel] | [k] | __fget_light |
| 0.90% | libc.so.6 | [.] | __memcpy_generic |
| 0.89% | haproxy-aws-lc-v1.29.0-c11-emu | [.] | CBB_flush |

This issue was reported to the AWS-LC project, which welcomed the report and fixed this oversight (mostly a problem of cat-and-mouse in the cmake-based build system).

Finally, modern versions of OpenSSL (3.1, 3.2, 3.3 and 3.4-dev) do not benefit much from the lighter locks. Their performance remains identical across all four versions, increasing from 25000 to 28000 connections per second with the lighter locks and reaching a plateau between 24 and 32 threads. That’s equivalent to 22.5% of OpenSSL 1.1.1’s performance, and 15.3% of AWS-LC’s. This clearly indicates that the contention is no longer confined to locks and is now spread throughout the code due to excessive use of atomic operations. The problem stems from a fundamental software architecture issue rather than simple optimization concerns. A permanent solution will require rolling back to a lighter architecture that prioritizes efficient resource utilization and aligns with real-world application requirements.

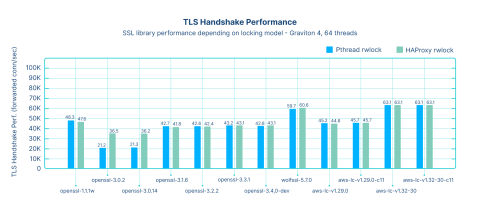

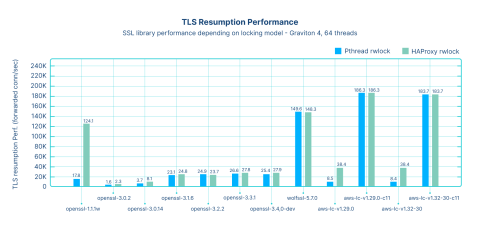

Performance summary per locking mechanism

The graph below shows how each library performs, in number of server handshakes per second (the numbers are expressed in thousands of connections per second).

With the exception of OpenSSL 3.0.x, the libraries are not affected by the locks during this phase, indicating that they are not making heavy use of them. The performance is roughly the same across all libraries, with the CPU-aware ones (AWS-LC and WolfSSL) at the top, followed by OpenSSL 1.1.1, then all versions of OpenSSL 3.x.

The following graph shows how the libraries perform for TLS resumption (the numbers are expressed in thousands of forwarded connections per second).

This test involves end-to-end connections, where the client establishes a connection to HAProxy, which then establishes a connection to the server. Preliminary handshakes had already been performed, and connections were resumed from a ticket, which explains why the numbers are much higher than in the previous test. OpenSSL 1.1.1w shows bad performance by default, due to a moderate use of locking; however, it became one of the best performers when lighter locks were used. OpenSSL 3.0.x versions exhibit extremely poor performance that can be improved only slightly by replacing the locks; at best, performance is doubled.

All OpenSSL 3.x versions remain poor performers, with locking being only a small part of their problem. However, those who are stuck with these versions can still benefit from our lighter locks by setting an HAProxy build option. The performance of the default build of AWS-LC 1.32 is also very low because it incorrectly detects the compiler and uses locks instead of atomic operations for reference counting. However, once properly configured, it becomes the best performer. WolfSSL is very good out of the box. Note that despite the wrong compilation option, AWS-LC is still significantly better than any OpenSSL 3.x version, even with OpenSSL 3.x using our lighter locks.

Future of SSL libraries

Unfortunately, the future is not bright for OpenSSL users. After one of the most massive performance regressions in history, measurements show no further progress on this issue over the last two years, suggesting that the team’s ability to fix this important problem has reached a plateau.

It is often said that fixing a problem requires smarter minds than those who created it. When the problem was architected by a team with strong convictions about the solution’s correctness, it seems extremely unlikely that the resolution will come from that same team. The lack of progress in the latest releases tends to confirm this unfortunate hypothesis. The only path forward seems to be for the team to revert some of the major changes that plague the 3.x versions, but discussions suggest that this is out of the question for them.

It is hard to guess what good or bad can emerge from a project in which technical matters are still decided by committees and votes, despite this anti-pattern being well known for causing more harm than good; bureaucracy and managers deciding against common sense usually don’t result in trustworthy solutions, since the majority is not necessarily right in technical matters. It also doesn’t appear that further changes are expected soon, as the project just reorganized but kept its committees and vote-based decision process.

In early 2023 Rich Salz, one of the project’s leaders, indicated that the QuicTLS project was considering moving to the Apache Foundation via the Apache Incubator and potentially becoming Apache TLS. This has not happened. One possible explanation might be related to the difficulty in finding sufficient maintainers willing to engage long-term in such an arduous task. There’s probably also the realization that OpenSSL completely ruined their performance with versions 3 and above; that doesn’t make it very appealing for developers to engage with a new project that starts out crippled by a major performance flaw, and with the demonstrated inability of the team to improve or resolve the problems after two years. At IETF 120, the QuicTLS project leaders indicated that their goal is to diverge from OpenSSL, work in a similar fashion to BoringSSL, and collaborate with others.

AWS-LC looks like a very active project with a strong community. During our first encounter, there were a few rough edges that were quickly addressed. Even the recently reported performance issue was quickly fixed and released with the next version. Several versions were issued during the write-up of this article. This is definitely a library that anyone interested in the topic should monitor.

Recommendations for HAProxy users

What are the solutions for end users?

Regardless of the performance impact, if operating system vendors would ship the QuicTLS patch set applied on top of OpenSSL releases, that would help a lot with the adoption of QUIC in environments that are not sensitive to performance.

For users who want to test or use QUIC and don’t care about performance (i.e. the majority), HAProxy offers the limited-quic option that supports QUIC without 0-RTT on top of OpenSSL. For other users, including users of other products, building QuicTLS is easy and will provide a 100% OpenSSL compatible library that integrates seamlessly with any code.

Regarding the performance impact, those able to upgrade their versions regularly should adopt AWS-LC. The library integrates well with existing code, since it shares ancestry with BoringSSL, which itself is a fork of OpenSSL. The team is helpful and responsive, and we have not yet found a meaningful feature of HAProxy’s SSL stack that is not compatible. While there is no official LTS branch, FIPS branches are maintained for 5 years, which can be a suitable alternative. For users on the cutting edge, it is recommended to periodically upgrade and rebuild their AWS-LC library.

Those who want to fine-tune the library for their systems should probably turn to WolfSSL. Its support is pretty good; however, given that it doesn’t have common ancestry with OpenSSL and only emulates its API, from time to time we discover minor differences. As a result, deploying it in a product requires a lot of testing and feature validation. There is a company behind the project, so it should be possible to negotiate a support period that suits both parties.

In the meantime, since we have not decided on a durable solution for our customers, we’re offering packages built against OpenSSL 1.1.1 with extended support and the QuicTLS patchset. This solution offers the best combination of support, features, and performance while we continue evaluating the SSL landscape.

The current state of OpenSSL 3.0 in Linux distributions forces users to seek alternative solutions that are usually not packaged. This means users no longer receive automatic security updates from their OS vendors, leaving them solely responsible for addressing any security vulnerabilities that emerge. As such, the situation has significantly undermined the overall security posture of TLS implementations in real-world environments. That’s not counting the challenges with 3.0 itself, which constitutes an easy DoS target, as seen above. We continue to watch news on this topic and to publish our updated findings and suggestions in the HAProxy wiki, which everyone is obviously encouraged to periodically check.

Hopes

We can only hope that the situation will clarify itself over time.

First, OpenSSL ought not to have tagged 3.0 as LTS, since it simply does not work for anything beyond command-line tools such as “openssl s_client” and Curl. We urge them to tag a newer release as LTS because, while the performance starting with 3.1 is still very far away from what users were having before the upgrade, we’re back into an area where it is usable for small sites. On top of this, the QuicTLS fork would then benefit from a usable LTS version with QUIC support, again for sites without high performance requirements.

OpenSSL has finally implemented its own QUIC API in 3.5-beta, ending a long-standing issue. However, this new API is not compatible with the standard one that other libraries and QUIC implementations have been using for years. It will require significant work to integrate existing implementations with this new QUIC API, and it is unlikely that many new implementations using the new QUIC API will emerge in the near future; as such, the relevance of this API is currently uncertain. Curl author Daniel Stenberg has a review of the announcement on his blog.

Second, in a world where everyone is striving to reduce their energy footprint, sticking to a library that operates at only a quarter of its predecessor's efficiency, and is six to nine times slower than the competition, contradicts global sustainability efforts. This is not acceptable, and it requires the community to unite in an effort to address the problem.
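To make the cost of "a quarter of its predecessor's efficiency" concrete, here is a rough back-of-the-envelope sketch. The fleet size of 10 servers is a purely hypothetical figure chosen for illustration, and the function name `servers_needed` is ours; the model also assumes that throughput scales linearly with the number of servers, which real deployments only approximate.

```python
import math
from fractions import Fraction


def servers_needed(baseline_servers: int, relative_efficiency: Fraction) -> int:
    """Servers required to keep the same total throughput when each server
    delivers only `relative_efficiency` of its former capacity.

    Assumes linear scaling with server count (a simplification).
    """
    if relative_efficiency <= 0:
        raise ValueError("relative_efficiency must be positive")
    # Exact rational arithmetic avoids floating-point rounding before ceil().
    return math.ceil(baseline_servers / relative_efficiency)


if __name__ == "__main__":
    baseline = 10               # hypothetical fleet size, illustration only
    quarter = Fraction(1, 4)    # "a quarter of its predecessor's efficiency"
    print(servers_needed(baseline, quarter))
```

Under these assumptions, a fleet that previously handled its TLS load on 10 machines would need 40 to sustain the same throughput, with the corresponding increase in power draw.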

Both AWS-LC and QuicTLS seem to pursue comparable goals of providing QUIC, high performance, and good forward compatibility to their users. Maybe it would make sense for such projects to join efforts to provide users with a few LTS versions of AWS-LC that deliver excellent performance. It is clear that operating system vendors currently lack a support commitment long enough to start shipping such a library, and that once one is accepted, most SSL-enabled software would quickly adopt it, given the huge benefits that can be expected.

We hope that an acceptable solution will be found before OpenSSL 1.1.1 reaches the end of paid extended support. A similar situation happened around 22 years ago on Linux distros. There was a divergence between threading mechanisms and libraries; after a few distros started to ship the new NPTL kernel and library patches, NPTL was progressively adopted by all distros and eventually became the standard threading library. The industry likely needs a few distributions to lead the way and embrace an updated TLS library; this will encourage others to follow suit.

We consistently monitor announcements and engage in discussions with implementers to enhance the experience for our users and customers. The hope is that within a reasonable time frame, an efficient and well-maintained library, provided by default with operating systems and supporting all features including QUIC, will be available. Work continues in this direction with increased confidence that such a situation will eventually emerge, and steps toward improvement are noticeable across the board, such as OpenSSL's recent announcement of a maintenance cycle for a new LTS version every two years, with five years of support.

We invite you to stay tuned for the next update at our very own HAProxyConf in June 2025, where we will usher in HAProxy’s next generation of TLS performance and compatibility.
