
Consul service mesh

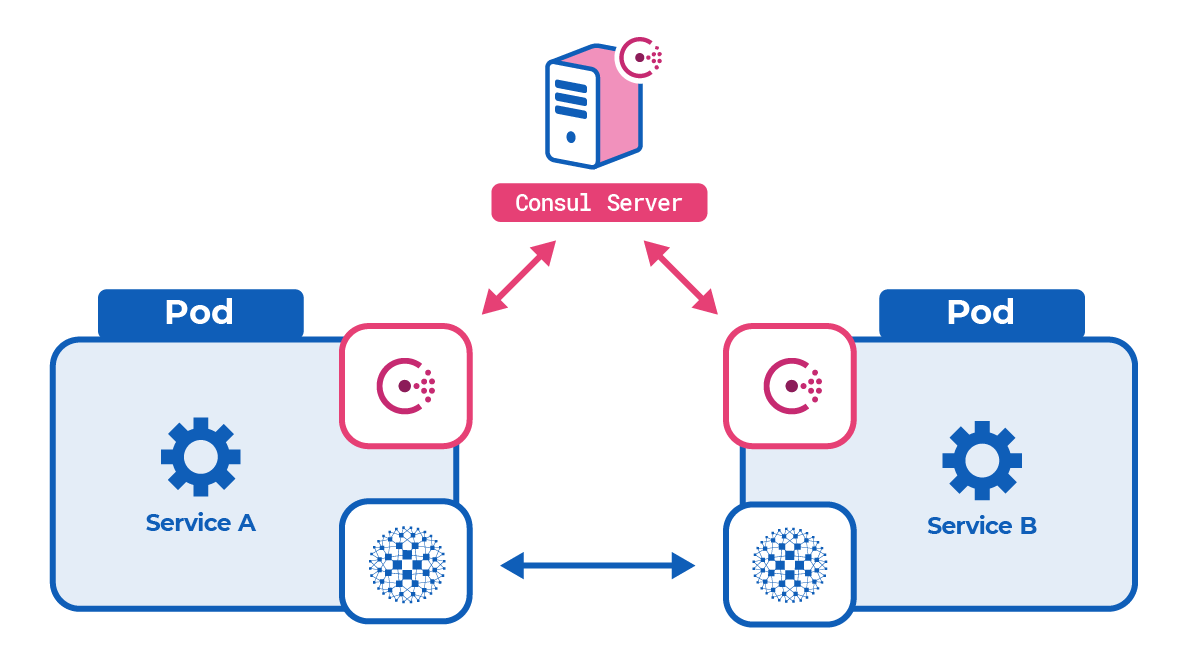

HashiCorp Consul operates as a service mesh when you enable its Connect mode. In this mode, Consul agents integrate with HAProxy Enterprise to form an interconnected web of proxies. Whenever one of your services calls another, the communication is relayed through this web, or mesh, with HAProxy Enterprise nodes passing messages between the services.

An HAProxy Enterprise node runs next to each of your services, on both the caller and the callee side. When a caller makes a request, it sends the request to localhost, where HAProxy Enterprise is listening. HAProxy Enterprise then relays it transparently to the remote callee. From the caller’s perspective, all services appear to be local, which simplifies the service’s configuration.
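From the application's point of view, calling another service through the mesh is an ordinary request to a local port. The Python sketch below illustrates this under stated assumptions: the upstream speaks plain HTTP, it is bound to local port 3000 (as in the `app-ui` example later on this page), and the helper names `upstream_url` and `call_upstream` are hypothetical, not part of Consul or HAProxy Enterprise.

```python
import urllib.request


def upstream_url(local_port: int, path: str = "/") -> str:
    """Build the URL an application uses to reach an upstream service.

    The application only addresses the loopback interface; the local
    HAProxy Enterprise proxy listening on that port forwards the request
    to the remote service.
    """
    if not path.startswith("/"):
        path = "/" + path
    return f"http://127.0.0.1:{local_port}{path}"


def call_upstream(local_port: int, path: str = "/", timeout: float = 5.0) -> str:
    """Send a GET request to an upstream through the local proxy and return the body."""
    with urllib.request.urlopen(upstream_url(local_port, path), timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

Inside the `app-ui` pod, `call_upstream(3000)` would reach `example-service` without the application knowing where that service actually runs.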

Deploy in Kubernetes

This section describes how to deploy the Consul service mesh with HAProxy Enterprise in Kubernetes.

Deploy the Consul servers

Consul agents running in server mode watch over the cluster and send service discovery information to each Consul client in the service mesh.

1. Deploy the Consul server nodes. In Kubernetes, you can install them with the Consul Helm chart:

   ```shell
   helm repo add hashicorp https://helm.releases.hashicorp.com
   helm repo update
   helm install consul hashicorp/consul \
     --set global.name=consul \
     --set connect=true
   ```

   If you are using a single-node Kubernetes cluster, such as minikube, set the `server.replicas` and `server.bootstrapExpect` flags, as described in the guide Consul Service Discovery and Mesh on Minikube:

   ```shell
   helm install consul hashicorp/consul \
     --set global.name=consul \
     --set connect=true \
     --set server.replicas=1 \
     --set server.bootstrapExpect=1
   ```

2. Create a file named `pod-reader-role.yaml` and add the following contents to it. This creates a Role and a RoleBinding in your Kubernetes cluster that grant the Consul agents permission to read pod labels.

   pod-reader-role.yaml

   ```yaml
   kind: Role
   apiVersion: rbac.authorization.k8s.io/v1
   metadata:
     namespace: default
     name: pod-reader
   rules:
   - apiGroups: [""]
     resources: ["pods"]
     verbs: ["get", "watch", "list"]
   ---
   kind: RoleBinding
   apiVersion: rbac.authorization.k8s.io/v1
   metadata:
     name: read-pods
     namespace: default
   subjects:
   - kind: User
     name: system:serviceaccount:default:default
     apiGroup: rbac.authorization.k8s.io
   roleRef:
     kind: Role
     name: pod-reader
     apiGroup: rbac.authorization.k8s.io
   ```

3. Deploy it with `kubectl apply`:

   ```shell
   kubectl apply -f pod-reader-role.yaml
   ```

Deploy your application

For each service that you want to include in the service mesh, you must deploy two extra containers into the same pod.

- container 1: your application
- container 2: Consul agent, `consul`
- container 3: HAProxy-Consul connector, `hapee-plus-registry.haproxy.com/hapee-consul-connect`

The three containers (application, Consul, Consul-HAProxy Enterprise connector) are defined inside a single pod.
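Because the same three-container pattern repeats for every service, teams that generate manifests from code may find a small helper useful. The sketch below is a hypothetical helper (`mesh_containers` is not part of any HAProxy or Consul tooling) that returns the container list as plain Python dictionaries, assuming you serialize them into a Deployment yourself, for example with a YAML library. The container names, images, and arguments are copied from the `example-service` Deployment in the steps that follow.

```python
import json


def mesh_containers(service: str, image: str, port: int) -> list[dict]:
    """Return the three container specs for one service in the mesh:
    the application, the HAProxy-Consul connector, and the Consul agent."""
    # Registration handed to the Consul agent through CONSUL_LOCAL_CONFIG.
    local_config = {
        "service": {"name": service, "port": port, "connect": {"sidecar_service": {}}}
    }
    return [
        # The application itself.
        {"name": service, "image": image},
        # The HAProxy Enterprise connector that proxies for the application.
        {
            "name": "haproxy-enterprise-consul",
            "image": "hapee-plus-registry.haproxy.com/hapee-consul-connect",
            "args": [f"-sidecar-for={service}", "-enable-intentions"],
        },
        # The Consul agent that registers the service and joins the servers.
        {
            "name": "consul",
            "image": "consul",
            "env": [{"name": "CONSUL_LOCAL_CONFIG", "value": json.dumps(local_config)}],
            "args": [
                "agent",
                "-bind=0.0.0.0",
                '-retry-join=provider=k8s label_selector="app=consul"',
            ],
        },
    ]
```

Generating the `CONSUL_LOCAL_CONFIG` value with `json.dumps` rather than typing it by hand avoids unbalanced braces and quoting mistakes inside the YAML string.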

1. Use `kubectl create secret` to store your credentials for the private HAProxy Docker registry, replacing `<KEY>` with your HAProxy Enterprise license key. You will pull the `hapee-consul-connect` container image from this registry.

   ```shell
   kubectl create secret docker-registry regcred \
     --namespace=default \
     --docker-server=hapee-plus-registry.haproxy.com \
     --docker-username=<KEY> \
     --docker-password=<KEY>
   ```

2. Add the `haproxy-enterprise-consul` and `consul` containers to each of your Kubernetes Deployment manifests. In the example below, we deploy these two containers inside the same pod as a service named `example-service`.

   example-deployment.yaml

   ```yaml
   apiVersion: apps/v1
   kind: Deployment
   metadata:
     name: example-service
     labels:
       app: example-service
   spec:
     replicas: 1
     selector:
       matchLabels:
         app: example-service
     template:
       metadata:
         labels:
           app: example-service
       spec:
         imagePullSecrets:
         - name: regcred
         containers:
         - name: example-service
           image: jmalloc/echo-server
         - name: haproxy-enterprise-consul
           image: hapee-plus-registry.haproxy.com/hapee-consul-connect
           args:
           - -sidecar-for=example-service
           - -enable-intentions
         - name: consul
           image: consul
           env:
           - name: CONSUL_LOCAL_CONFIG
             value: '{"service": {"name": "example-service","port": 80,"connect": {"sidecar_service": {}}}}'
           args: ["agent", "-bind=0.0.0.0", "-retry-join=provider=k8s label_selector=\"app=consul\""]
   ```

   Note the following arguments for the `haproxy-enterprise-consul` container:

   | Argument | Description |
   |---|---|
   | `-sidecar-for=example-service` | Indicates the name of the service for which to create an HAProxy Enterprise proxy. |
   | `-enable-intentions` | Enables Consul intentions, which HAProxy Enterprise enforces. |

   Note the following arguments for the `consul` container:

   | Argument | Description |
   |---|---|
   | `agent` | Runs the Consul agent. |
   | `-bind=0.0.0.0` | The address to bind to for internal cluster communications. |
   | `-retry-join=provider=k8s label_selector="app=consul"` | Similar to `-join`, which specifies the address of another agent to join at startup (typically one of the Consul server agents), but retries the join until it succeeds. In Kubernetes, set this to `provider=k8s` and include a label selector for finding the Consul servers. The Consul Helm chart adds the label `app=consul` to the Consul server pods. |

   We’ve registered `example-service` with the Consul service mesh by setting an environment variable named `CONSUL_LOCAL_CONFIG` in the Consul container. This variable holds the Consul configuration and the registration for the service, and it indicates that the service receives requests on port 80:

   ```json
   {
     "service": {
       "name": "example-service",
       "port": 80,
       "connect": {
         "sidecar_service": {}
       }
     }
   }
   ```

3. Deploy it with `kubectl apply`:

   ```shell
   kubectl apply -f example-deployment.yaml
   ```

Deploy a second application that calls the other

The example-service from the previous section is published to the service mesh where other services within the mesh can call it. To define a service that calls another, add a proxy section to the connect.sidecar_service section of the Consul container’s configuration.

In the example below, the service named app-ui adds the example-service as an upstream service, which makes it available at localhost at port 3000 inside the pod.

app-ui-deployment.yaml

Note that we set the environment variable named CONSUL_LOCAL_CONFIG in the Consul container to register this service with the service mesh. It declares that it has an upstream dependency on the example-service service.
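The upstream declaration uses Consul's sidecar proxy configuration, in which each entry under `connect.sidecar_service.proxy.upstreams` names a `destination_name` and a `local_bind_port`. The Python sketch below builds such a `CONSUL_LOCAL_CONFIG` value. It assumes `app-ui` itself listens on port 8080 (a hypothetical value; use your application's real port), and the helper name `registration_with_upstreams` is hypothetical.

```python
import json


def registration_with_upstreams(name: str, port: int, upstreams: dict[str, int]) -> str:
    """Return a CONSUL_LOCAL_CONFIG value for a service that calls other services.

    `upstreams` maps each destination service name to the local port on which
    the sidecar proxy should expose that service inside the pod.
    """
    config = {
        "service": {
            "name": name,
            "port": port,
            "connect": {
                "sidecar_service": {
                    "proxy": {
                        "upstreams": [
                            {"destination_name": dest, "local_bind_port": local_port}
                            for dest, local_port in upstreams.items()
                        ]
                    }
                }
            },
        }
    }
    # Compact separators keep the value on a single line for the YAML manifest.
    return json.dumps(config, separators=(",", ":"))
```

For example, `registration_with_upstreams("app-ui", 8080, {"example-service": 3000})` declares the dependency described above, exposing `example-service` at localhost port 3000 inside the `app-ui` pod.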

Optional: Publish the web dashboard

The Helm chart creates a Kubernetes service named consul-server that exposes a web dashboard on port 8500. To make it available outside of the Kubernetes cluster, you can forward the port via the HAProxy Enterprise Kubernetes Ingress Controller:

1. Deploy the HAProxy Enterprise Kubernetes Ingress Controller into your Kubernetes cluster.

2. Create a file named `consul-server-ingress.yaml` that defines an Ingress resource for the Consul service. In this example, we define a host-based rule that routes all requests for `consul.test.local` to the `consul-server` service at port 8500.

   consul-server-ingress.yaml

   ```yaml
   apiVersion: networking.k8s.io/v1
   kind: Ingress
   metadata:
     name: consul-server-ingress
   spec:
     rules:
     - host: consul.test.local
       http:
         paths:
         - path: "/"
           pathType: Prefix
           backend:
             service:
               name: consul-server
               port:
                 number: 8500
   ```

3. Deploy it using `kubectl apply`:

   ```shell
   kubectl apply -f consul-server-ingress.yaml
   ```

4. Add an entry to your system’s `/etc/hosts` file that maps the `consul.test.local` hostname to the IP address of your Kubernetes cluster. If you are using minikube, you can get the IP address of the node with `minikube ip`:

   ```shell
   minikube ip
   ```

   output

   ```text
   192.168.99.120
   ```

   Below is an example `/etc/hosts` entry that uses this address:

   ```text
   192.168.99.120 consul.test.local
   ```

5. Use `kubectl get service` to check which port the ingress controller has mapped to port 80. In the example below, port 80 is mapped to port 30624.

   ```shell
   kubectl get service kubernetes-ingress
   ```

   output

   ```text
   NAME                 TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)                                      AGE
   kubernetes-ingress   NodePort   10.110.104.60   <none>        80:30624/TCP,443:31147/TCP,1024:31940/TCP   7m40s
   ```

   Open a browser window and go to the `consul.test.local` address at that port, for example, `http://consul.test.local:30624`.
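If you want to script the last step, the NodePort can be read from the `PORT(S)` column, where each comma-separated entry has the form `port:nodePort/protocol`. The Python sketch below assumes that format, as shown in the output above; the function names `node_port_for` and `dashboard_url` are hypothetical.

```python
def node_port_for(ports_column: str, service_port: int = 80, protocol: str = "TCP") -> int:
    """Return the NodePort mapped to `service_port` in a kubectl PORT(S) value.

    Each comma-separated entry looks like "80:30624/TCP", meaning service
    port 80 is exposed on node port 30624.
    """
    for entry in ports_column.split(","):
        mapping, _, proto = entry.strip().partition("/")
        port, sep, node_port = mapping.partition(":")
        # Entries without a ":" have no NodePort mapping, so skip them.
        if sep and int(port) == service_port and proto == protocol:
            return int(node_port)
    raise ValueError(f"no NodePort mapping for {service_port}/{protocol}")


def dashboard_url(host: str, ports_column: str) -> str:
    """Build the browser URL for the Consul dashboard behind the ingress."""
    return f"http://{host}:{node_port_for(ports_column)}/"
```

With the sample output above, `dashboard_url("consul.test.local", "80:30624/TCP,443:31147/TCP,1024:31940/TCP")` returns `"http://consul.test.local:30624/"`.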

Optional: Enable Consul ACLs

In Consul, ACLs are a security measure that requires Consul agents to present an authentication token before they can join the cluster or call API methods.

1. When installing Consul, set the `global.acls.manageSystemACLs` flag to `true` to enable ACLs:

   ```shell
   helm install consul hashicorp/consul \
     --set global.name=consul \
     --set connect=true \
     --set global.acls.manageSystemACLs=true
   ```

2. Install `jq`, a command-line utility for processing JSON data. It provides a simple and powerful way to filter, format, and transform JSON data structures.

   On Debian or Ubuntu:

   ```shell
   sudo apt install jq
   ```

   On RHEL or CentOS:

   ```shell
   sudo yum install jq
   ```

3. Use `kubectl get secret` to get the auto-generated bootstrap token, which is base64 encoded:

   ```shell
   kubectl get secret consul-bootstrap-acl-token -o json | jq -r '.data.token' | base64 -d
   ```

   output

   ```text
   8f1c8c5e-d0fb-82ff-06f4-a4418be245dc
   ```

   Use this token to log into the Consul web UI.

4. In the Consul web UI, go to ACL > Policies and select the client-token row. Change the policy’s rules so that the `service_prefix` section has a policy of `write`:

   ```hcl
   node_prefix "" {
     policy = "write"
   }

   service_prefix "" {
     policy = "write"
   }
   ```

5. Go back to the ACL screen and select the client-token row. Copy this token value (for example, `f62a3058-e139-7e27-75a0-f47df9e2e4bd`).

6. For each of your services, update your Deployment manifest so that the `haproxy-enterprise-consul` container includes the `-token` argument, set to the client-token value:

   ```yaml
   - name: haproxy-enterprise-consul
     image: hapee-plus-registry.haproxy.com/hapee-consul-connect
     args:
     - -sidecar-for=app-ui
     - -enable-intentions
     - -token=f62a3058-e139-7e27-75a0-f47df9e2e4bd
   ```

7. Update the `consul` container’s configuration to include an `acl` section where you specify the same client-token value. Also, set `primary_datacenter` to `dc1` (or to the value you’ve set for your primary datacenter, if you have changed it).

   ```yaml
   - name: consul
     image: consul
     env:
     - name: CONSUL_LOCAL_CONFIG
       value: '{"primary_datacenter": "dc1","acl": {"enabled": true,"default_policy": "allow","down_policy": "extend-cache","tokens": {"default": "f62a3058-e139-7e27-75a0-f47df9e2e4bd"}},"service": {"name": "example-service","port": 80,"connect": {"sidecar_service": {}}}}'
   ```
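The token handling in these steps can also be scripted. The Python sketch below assumes you have captured the output of `kubectl get secret consul-bootstrap-acl-token -o json` as a string. `decode_secret_token` mirrors the `jq -r '.data.token' | base64 -d` pipeline from step 3, and `acl_registration` builds the same `CONSUL_LOCAL_CONFIG` value as step 7. Both function names are hypothetical.

```python
import base64
import json


def decode_secret_token(secret_json: str) -> str:
    """Extract and base64-decode .data.token from a Kubernetes Secret in JSON form."""
    secret = json.loads(secret_json)
    return base64.b64decode(secret["data"]["token"]).decode("utf-8")


def acl_registration(name: str, port: int, token: str, primary_datacenter: str = "dc1") -> str:
    """Return a CONSUL_LOCAL_CONFIG value with ACLs enabled, matching step 7."""
    config = {
        "primary_datacenter": primary_datacenter,
        "acl": {
            "enabled": True,
            "default_policy": "allow",
            "down_policy": "extend-cache",
            # Token the agent uses when a request supplies no other token
            # (the client-token value copied in step 5).
            "tokens": {"default": token},
        },
        "service": {
            "name": name,
            "port": port,
            "connect": {"sidecar_service": {}},
        },
    }
    return json.dumps(config, separators=(",", ":"))
```

The resulting string can be pasted as the `value` of `CONSUL_LOCAL_CONFIG`; the same token also goes into the `-token` argument of the `haproxy-enterprise-consul` container.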
